+ WHO?

Abel Korinsky

ReSilence Partners

+ WHAT?

Surveillance and scoring systems shaping daily life; a European Social Credit System using sound to reveal hidden data and question technology’s control.

+ HOW?

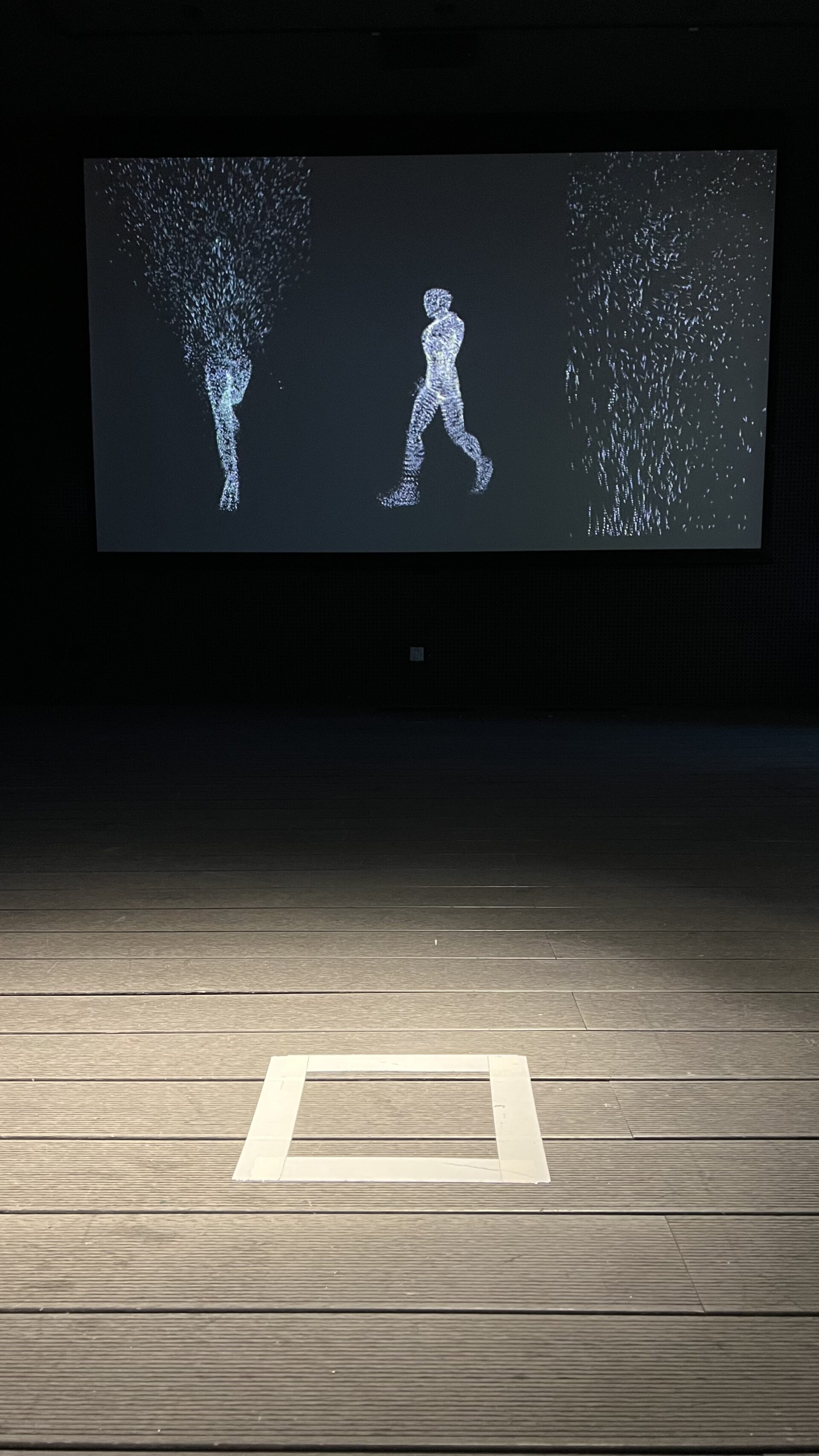

Tracking movement and behavior in real time, turning them into sound through AI, with “behavioral avatars” shaping a public Karma Scoreboard.

The work explores a sound-based system for analyzing and reflecting on both urban and indoor spaces. Using ultrasound directional speakers and AI technologies, it responds to people’s movements, providing sonic feedback generated from real-time analysis of appearance and behavior.

At its core lies a speculative scenario: a future shaped by an Environmental Karma System, an EU-driven Social Credit framework imagined to encourage sustainable, inclusive, and culturally aware behavior. Inspired by China’s Social Credit System and U.S. signature strikes, the project envisions a Virtue Analysis Network (VAN) that transforms visual surveillance into an auditory experience, producing personalized sonic narratives instead of relying on confirmed identities.

Challenges Addressed

The work critically examines:

- The expanding role of AI in urban surveillance

- Algorithmic bias and the risks of behavioral scoring

- Legal and ethical tensions around GDPR, discrimination, and re-identification

By exposing these issues, it raises questions of who is seen—and who is heard—in public space, and how automated systems may encode hidden biases.

Urban Engagement

Sound becomes an interactive medium to reveal the often-invisible dynamics of surveillance. As individuals move through the space, ultrasonic phased arrays generate a personalized sound “DNA” tied to their visual profile.

In this way, the work highlights how cities increasingly monitor, influence, and sort individuals—while also confronting audiences with the cultural and ethical consequences of living under constant algorithmic observation.

Collaborations

- UpnaLab (Pamplona): hardware prototyping and beam steering expertise.

- KDE Group: support with tracking logic and computer vision system tuning.

Surveillance Aesthetics, Algorithmic Bias, Urban Interaction, Phased Array Sound, Speculative Design, AI-Generated Narratives, Behavioral Profiling, Ethics of Recognition Technologies

Device-Making Guides

Hardware-Focused Description (Device-Making & Beam Steering)

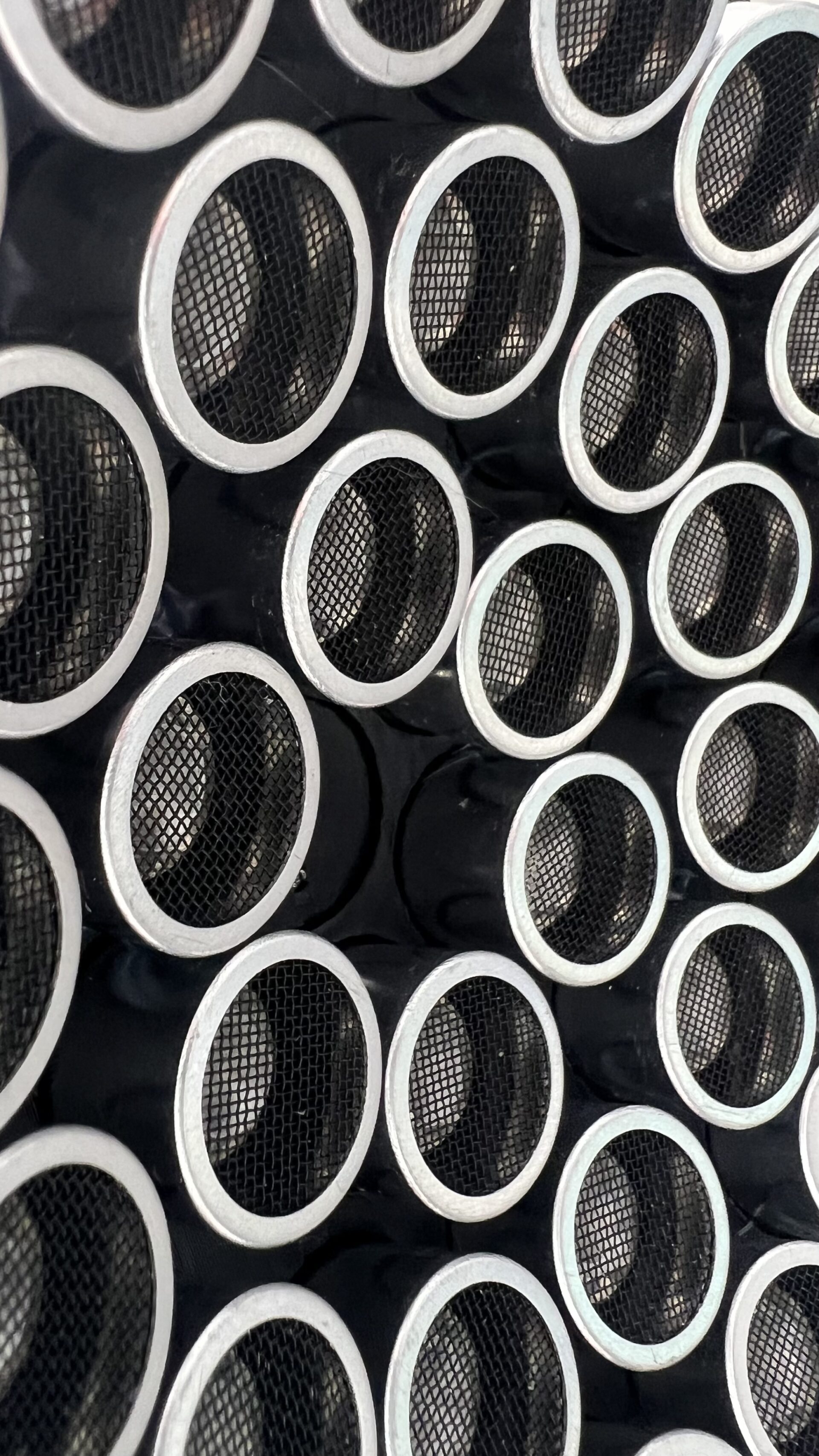

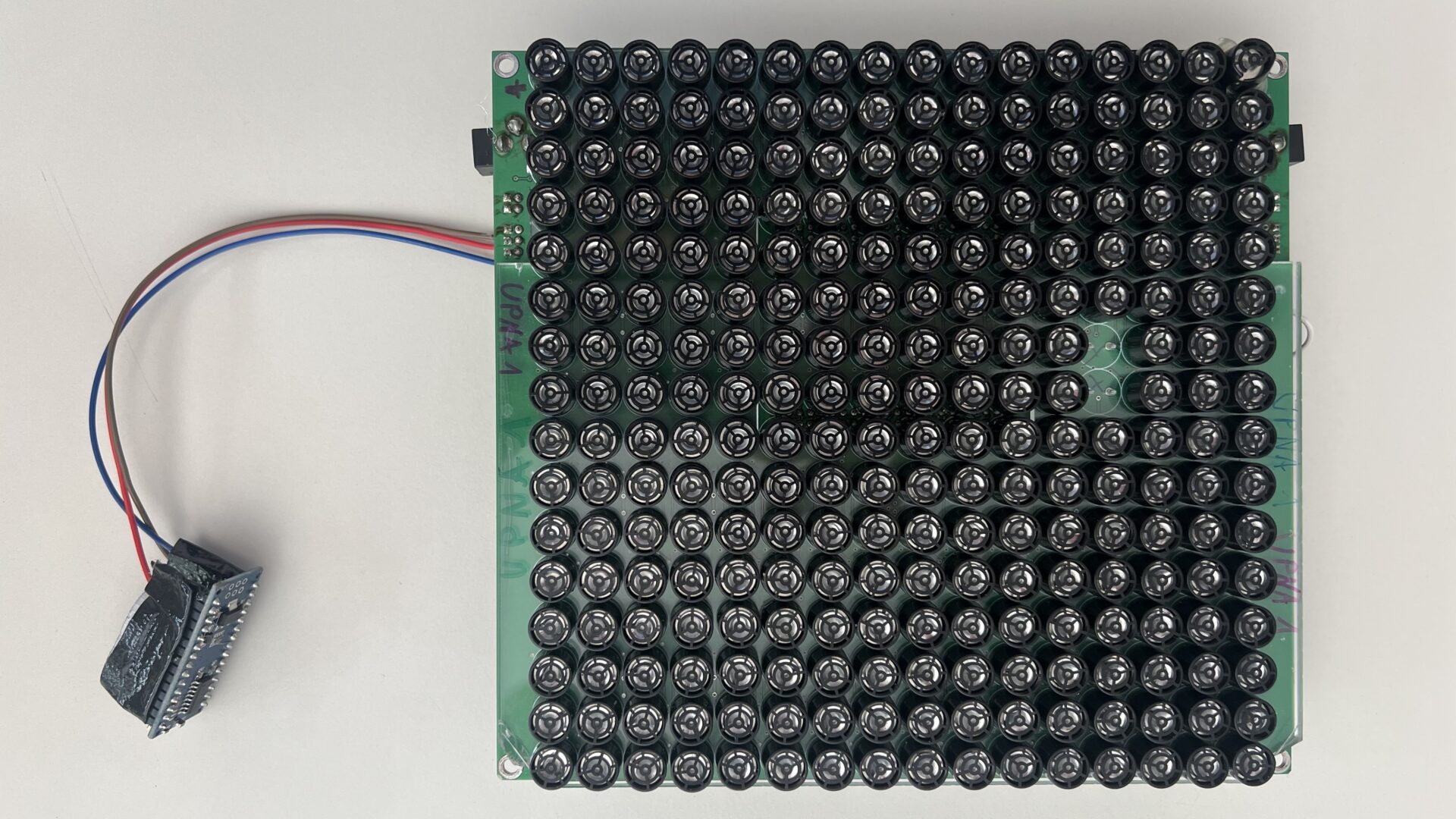

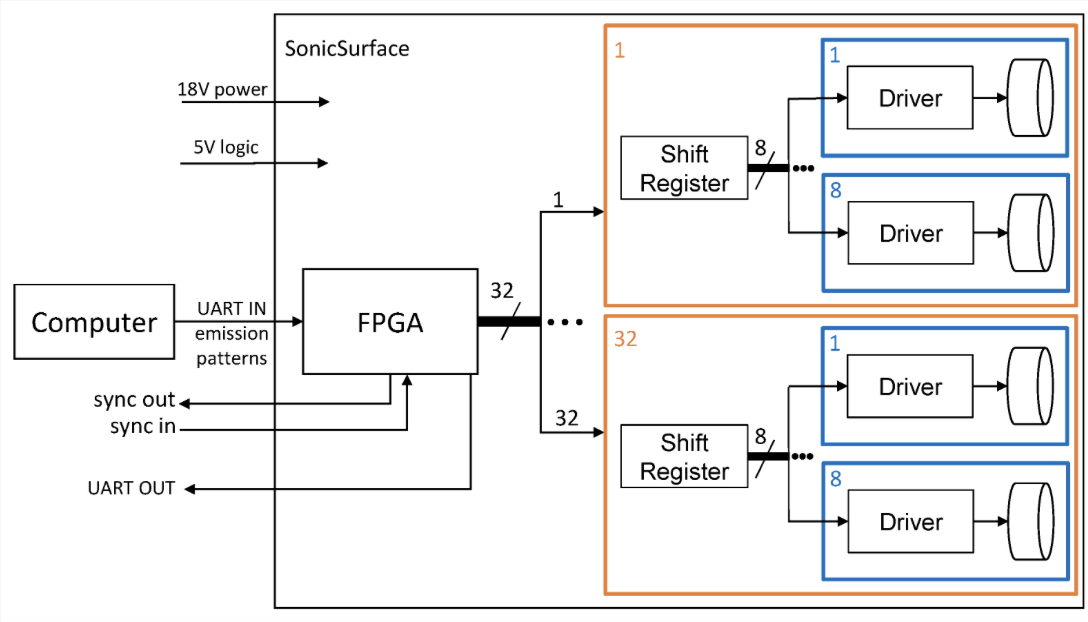

At the core of the installation is a custom-built ultrasonic phased array loudspeaker capable of directional beam steering. The device emits focused sound waves that can target individuals precisely in public spaces without spilling into the broader environment. It was co-developed with UpnaLab Pamplona, who contributed expertise in ultrasonic transducer design and beamforming control. The hardware includes:

- A modular transducer grid on an adjustable frame.

- Integrated amplifiers and a microcontroller interface for real-time beam control.

- Calibration tools aligning the speaker’s projection field with camera coordinates.
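The real-time beam control listed above can be illustrated with a minimal delay-and-sum sketch. It is not the project's firmware: the 40 kHz carrier, the 10 mm element pitch, the 8 by 8 grid, and the function names `steering_delays` and `delays_to_phases` are assumptions chosen for illustration. The idea is that each transducer fires with a small delay proportional to its position along the steering direction, so that the individual wavefronts add up in phase toward the target.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s in air at roughly 20 °C
CARRIER_HZ = 40_000.0    # assumed carrier; common for ultrasonic transducers


def steering_delays(n_x, n_y, pitch_m, azimuth_deg, elevation_deg):
    """Per-element firing delays (seconds) for a flat n_x by n_y grid.

    The grid lies in the x-y plane and radiates along +z. Delays are
    shifted so that the earliest element fires at t = 0.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    # x and y components of the unit vector pointing at the target.
    ux = math.sin(az) * math.cos(el)
    uy = math.sin(el)

    delays = []
    for iy in range(n_y):
        row = []
        for ix in range(n_x):
            # Element position relative to the grid centre.
            x = (ix - (n_x - 1) / 2) * pitch_m
            y = (iy - (n_y - 1) / 2) * pitch_m
            # Elements closer to the target travel less, so they fire later.
            row.append((x * ux + y * uy) / SPEED_OF_SOUND)
        delays.append(row)

    t_min = min(min(row) for row in delays)
    return [[t - t_min for t in row] for row in delays]


def delays_to_phases(delays, carrier_hz=CARRIER_HZ):
    """Convert delays to carrier phase lags in radians, wrapped to [0, 2*pi)."""
    return [[(2 * math.pi * carrier_hz * t) % (2 * math.pi) for t in row]
            for row in delays]


if __name__ == "__main__":
    # Hypothetical 8 x 8 grid with 10 mm pitch, steered 20° right and 5° down.
    d = steering_delays(8, 8, 0.010, azimuth_deg=20.0, elevation_deg=-5.0)
    print(delays_to_phases(d)[0][:3])
```

This far-field version steers a plane wave. Focusing on a point at a known distance would instead use each element's actual distance to the target, which the source does not describe.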

A detailed Device-Making Guide documents each phase—from component sourcing to assembly and alignment—ensuring reproducibility and transparency for future adaptations or public installations. Integration with real-time camera systems required algorithms converting 2D screen coordinates into 3D angular data for precise sound targeting.
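The conversion from 2D screen coordinates to steering angles can be sketched with a simple pinhole camera model. This is an illustration under stated assumptions, not the project's algorithm: the camera and speaker are assumed to be co-located and aligned, lens distortion is ignored, pixels are assumed square, and the function name `pixel_to_angles` and the 90° horizontal field of view are hypothetical.

```python
import math


def pixel_to_angles(u, v, width, height, hfov_deg):
    """Map a pixel (u, v) to (azimuth, elevation) in degrees.

    Azimuth is positive to the right of the optical axis, elevation is
    positive upward. Image origin is the top-left corner.
    """
    # Focal length in pixels, derived from the horizontal field of view.
    fx = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    fy = fx  # square pixels assumed

    # Direction of the viewing ray, with z = 1 along the optical axis.
    dx = (u - width / 2) / fx
    dy = -(v - height / 2) / fy  # image rows grow downward

    azimuth = math.degrees(math.atan2(dx, 1.0))
    elevation = math.degrees(math.atan2(dy, math.hypot(dx, 1.0)))
    return azimuth, elevation


if __name__ == "__main__":
    # Centre of a detected person's bounding box in a 1920 x 1080 frame.
    print(pixel_to_angles(1200, 400, 1920, 1080, hfov_deg=90.0))
```

In a real installation, the offset between camera and speaker and the lens intrinsics would come from the calibration step mentioned in the hardware list.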

Interactive Modules & Web Tools

Software-Focused Description (System Architecture & Intelligence)

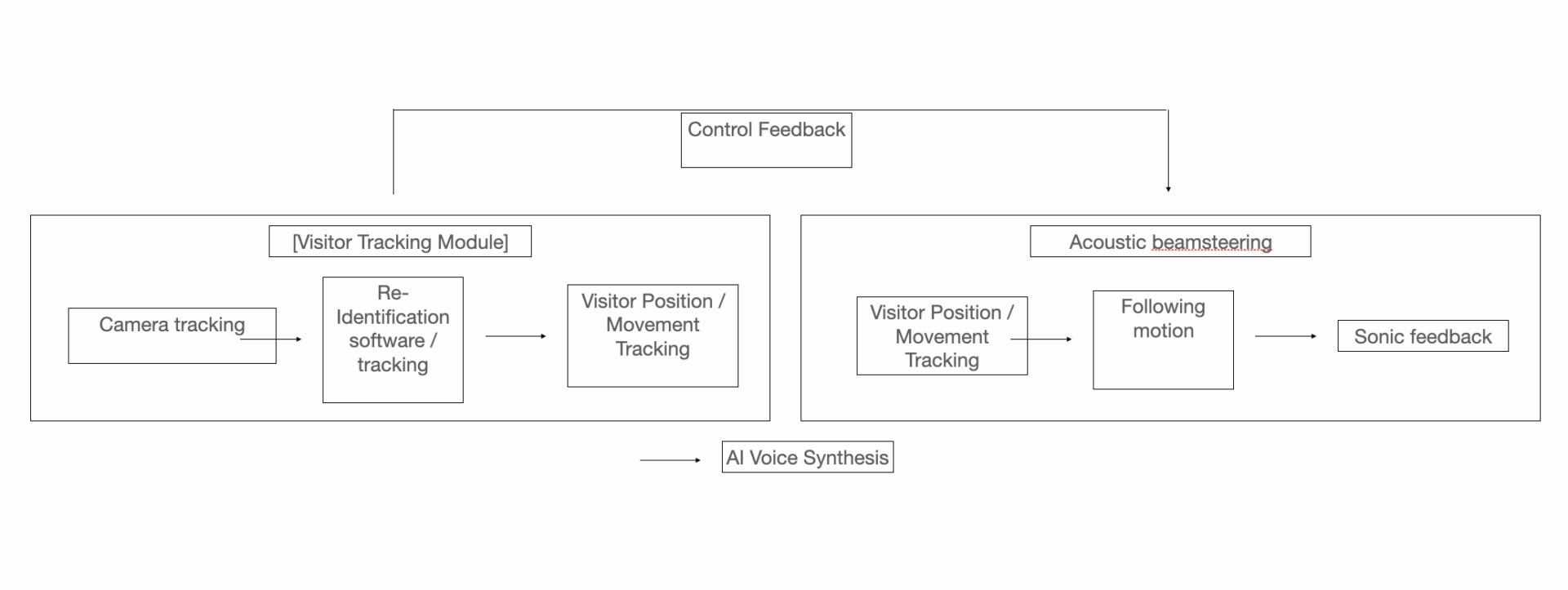

The software, built in Python, consists of four interlinked modules:

- Detection & Tracking Module

– Uses YOLOv8 for state-of-the-art object detection focused on pedestrians.

– Employs ByteTrack for multi-object tracking, ensuring reliable person IDs even in crowded scenes.

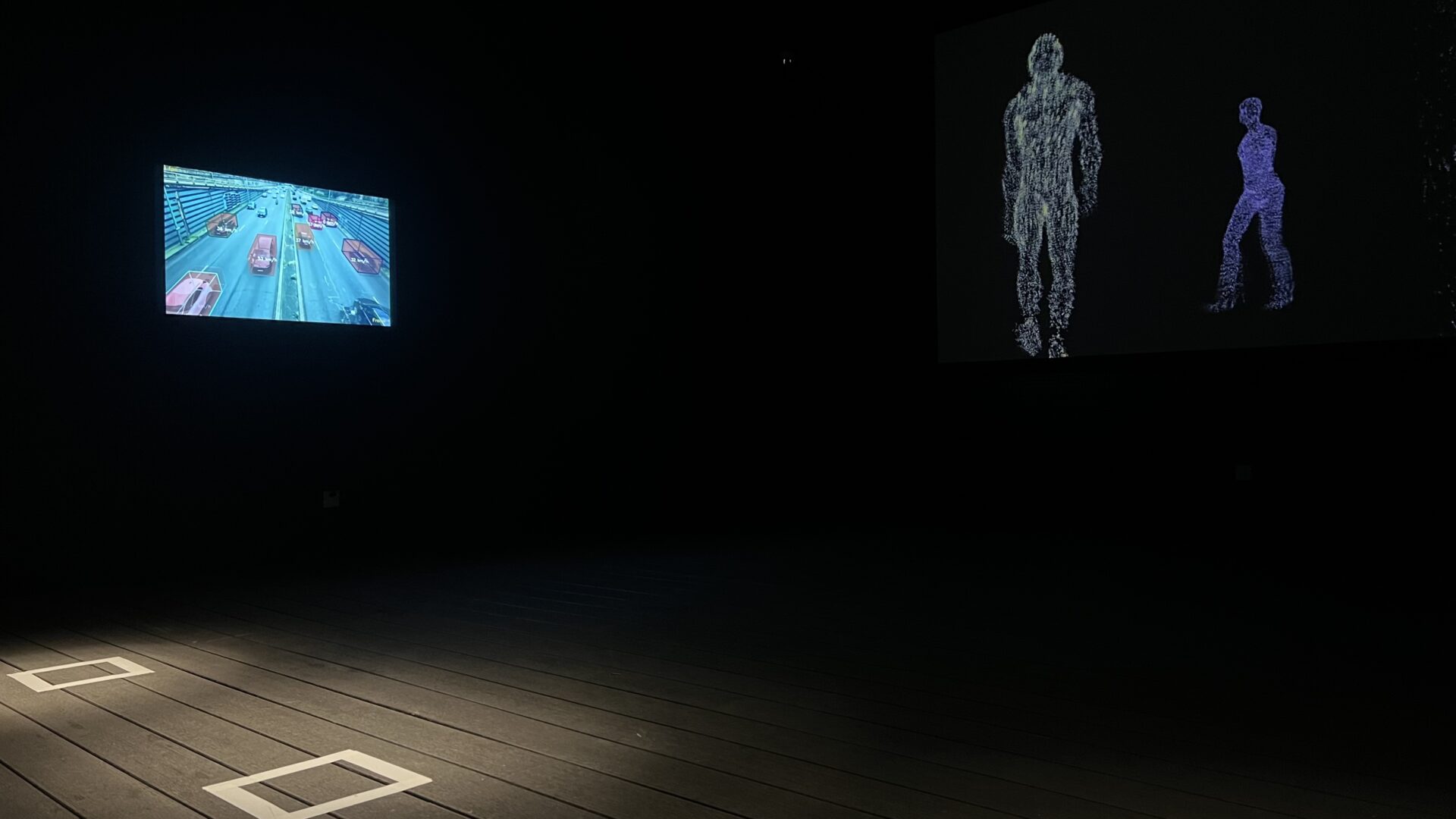

– Displays live tracking overlays and randomly selects targets on Monitor 1.

- Audio Targeting Module

– Synchronizes tracking data with ultrasonic beam steering.

– Converts camera-detected coordinates into real-world angles to aim sound precisely.

- Person Analysis Module

– Captures snapshots of selected individuals.

– Analyzes features (race, age, gender, clothing, expression) using local AI models, for privacy.

– All processing is done locally to comply with GDPR principles.

- Story Generation Module

– Uses a locally hosted language model to create speculative micro-narratives.

– Outputs synthesized voice and visual display on Monitor 2.

Diagrams of the system, showing its hardware architecture and the control feedback
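The random target selection described for the Detection & Tracking Module can be sketched as follows. The sketch takes only the set of person IDs that a tracker such as ByteTrack reports for each frame. The class name `TargetSelector` and the rule of keeping the same target for as long as its ID stays visible are assumptions, since the source only states that targets are selected randomly.

```python
import random


class TargetSelector:
    """Pick one tracked person at random and hold on to them.

    A new target is drawn only when the current one leaves the frame,
    so the beam does not jump between people on every update.
    """

    def __init__(self, rng=None):
        self.rng = rng or random.Random()
        self.current = None

    def update(self, active_ids):
        """Return the current target ID, or None if nobody is tracked."""
        ids = sorted(active_ids)
        if self.current in ids:
            return self.current
        self.current = self.rng.choice(ids) if ids else None
        return self.current


if __name__ == "__main__":
    selector = TargetSelector()
    # Track IDs per frame, as a multi-object tracker might report them.
    for frame_ids in [{3, 7, 9}, {3, 7, 9, 11}, {11}, set()]:
        print(frame_ids, "->", selector.update(frame_ids))
```

Stable track IDs matter here: if the tracker reassigned IDs between frames, the selector would treat the same person as a new target.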

Scenarios and Experiments:

Public Pilot – Goethe-Institut Beijing (China)

The system was exhibited in a public setting and will also be presented during the Art Week in Shanghai (November 2025). A directional ultrasonic loudspeaker was installed in the space. As people walked through, a camera tracked their movements and delivered personalized sound narratives directly to their ears via beam steering. Participants were initially unaware of the setup, which emphasized the subtle yet powerful role of sound as an intervention in public space.

Automated Vehicle Simulation

In collaboration with sound design students, the installation was tested on a stationary mock-up vehicle. A phased-array loudspeaker was mounted on the car, identifying pedestrians as they entered a defined zone. Directional sound was then used to either warn them of “approaching” vehicles or deliver contextual messages. This scenario illustrated the potential of targeted acoustic alerts for traffic safety in future urban environments.

In both cases, the system combined real-time tracking, local AI analysis, and narrative voice synthesis. The experiments demonstrated how sound can become a new medium for urban communication—moving beyond alarms and advertisements toward more nuanced, context-aware interactions.

Lessons learned:

The installation demonstrated how sound can be used to personalize urban space—but it also raised urgent questions of privacy, data usage, and consent. By shifting attention from the visual to the auditory, it triggered a conversation about possible alternatives to visual surveillance and revealed the risks embedded in systems that claim neutrality.

In simulations with vehicles, the work highlighted the potential of sound zoning as a tool for urban safety, while also stressing the need for precise sound localization in noisy environments. At the same time, it exposed how such technologies could intrude on people’s privacy—for example, by transforming visual material into sonic output. The challenge, therefore, is to approach such systems transparently and inclusively.

The project also pointed to the dangers of widespread cloud-based tracking applications, where sensitive information is stored online. In contrast, this work used local AI models that do not store personal data, but simply offer a critical glimpse into how AI is applied in detection, tracking, and analysis in urban space.

Artistic, Social, Technological, and Urban Benefits:

The project extends ReSilence’s urban pilot by using sound as a medium for reflection, interaction, and social commentary. Through real-time tracking, local AI analysis, and a phased-array ultrasonic speaker system, it introduces a new way of delivering non-intrusive, personalized sound in public space.

Artistically, it stages the politics of surveillance through speculative storytelling.

Socially, it invites people to engage directly with urban monitoring systems, making their invisible operations tangible.

Technologically, it pushes the integration of beam steering and local person analysis to create context-aware sonic responses.

In the urban dimension, it imagines cities that “speak” selectively and responsively—offering not only information, but also moments of poetic interruption.

Adaptability / interoperability:

Modular System Architecture

The system is designed as a modular and adaptable framework:

- Tracking, analysis, sound targeting, and story generation can be reconfigured or upgraded independently.

- Detection could shift to thermal imaging in privacy-sensitive contexts.

- Audio delivery could move from ultrasonic arrays to wearable devices.

- Built on open hardware and software, it enables artists, designers, and urban planners to extend and repurpose the tools in site-specific ways.
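One way to picture this modularity is a set of small interfaces that each stage implements, so that a stage can be swapped without touching the others. The sketch below is hypothetical: `Track`, `Detector`, `Narrator`, `AudioOutput`, `Pipeline`, and the stub classes are illustrative names, not the project's code.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Track:
    track_id: int
    azimuth_deg: float
    elevation_deg: float


class Detector(Protocol):
    def detect(self, frame: object) -> List[Track]: ...


class Narrator(Protocol):
    def narrate(self, track: Track) -> str: ...


class AudioOutput(Protocol):
    def play(self, track: Track, text: str) -> str: ...


class Pipeline:
    """Wires the stages together; each one can be replaced independently."""

    def __init__(self, detector: Detector, narrator: Narrator, output: AudioOutput):
        self.detector = detector
        self.narrator = narrator
        self.output = output

    def step(self, frame: object) -> List[str]:
        return [self.output.play(t, self.narrator.narrate(t))
                for t in self.detector.detect(frame)]


# Stub implementations standing in for real camera, thermal, or audio backends.
class StubDetector:
    def detect(self, frame):
        return [Track(1, 10.0, -2.0)]


class TemplateNarrator:
    def narrate(self, track):
        return f"Track {track.track_id} entered the space."


class BeamOutput:
    def play(self, track, text):
        return f"beam({track.azimuth_deg}, {track.elevation_deg}): {text}"


class WearableOutput:
    def play(self, track, text):
        return f"wearable: {text}"


if __name__ == "__main__":
    pipeline = Pipeline(StubDetector(), TemplateNarrator(), BeamOutput())
    print(pipeline.step(frame=None))
    pipeline.output = WearableOutput()  # swap audio delivery only
    print(pipeline.step(frame=None))
```

Swapping `BeamOutput` for `WearableOutput` mirrors the move from ultrasonic arrays to wearable devices mentioned above. A thermal-imaging detector would be swapped in the same way, by implementing `detect`.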

Thanks to its interoperability with smart city infrastructures, autonomous vehicles, and museum installations, the system supports a wide spectrum of applications—from safety and education to speculative artistic interventions in public space.

Impact

The project explores the synergy of hardware and AI to create a speculative surveillance environment where sound functions as algorithmic feedback. Through detection, targeting, and storytelling within an interactive system, it invites participants to reflect on privacy, public space, and the ethics of algorithmic judgment. At the same time, it proposes new uses of sound and sonic devices as vectors of visibility and control, critically engaging with the urban dimension.