WHO?

Max Planck Institute for Empirical Aesthetics (MPIEA)

Partner

WHAT?

Multimodal analysis of sound-related experience and behaviour.

HOW?

Modular System for Measuring and Analyzing Audience Engagement in Urban Sound Installations and Performances.

Background

The MPIEA’s research focuses on music experience design: understanding how the format, setting, and technological mediation of performances shape aesthetic experience. This includes live concerts in traditional and experimental formats, as well as technologically mediated performances (livestreams, spatial audio, interactive installations, augmented performances). By systematically measuring physiological, behavioral, and subjective responses, we investigate what makes musical experiences engaging, moving, and meaningful across different presentation formats.

Within the ReSilence project, this expertise extends to urban soundscapes. As cities struggle with noise pollution and acoustic chaos, innovative sound design offers opportunities to transform urban environments into spaces that foster mental resilience, ecological awareness, and community interaction. The MPIEA’s ArtLab provides the scientific infrastructure to systematically measure whether and how artistic interventions—from interactive sound installations to reimagined concert formats—affect people’s experience and behavior in urban contexts.

The Challenge

Measuring aesthetic experience in performance settings requires balancing two competing demands: scientific rigor and ecological validity. Traditional laboratory methods offer precise control but lack the authenticity of real-world experiences. Conversely, field studies preserve naturalism but sacrifice measurement quality and experimental control.

Key challenges include:

- Data integration: Synchronizing heterogeneous data streams (physiological sensors, cameras, audio, self-reports) with millisecond precision across multiple participants

- Minimal intrusion: Collecting high-quality physiological and behavioral data without disrupting participants’ immersion in the experience

- Scalability: Supporting research from individual case studies to large audience experiments while maintaining data quality

- Transferability: Enabling measurements both in controlled lab settings and real-world urban environments

Core Concepts: Multimodal analysis, Aesthetic experience, Concert research, Urban soundscapes, Psychophysiology, Live performance

Technical: Biosignal measurement, Motion capture, Eye tracking, Lab Streaming Layer, Time synchronization, Real-time visualization

Methods: Ecological validity, Physiological synchronization, Behavioral observation, Self-report, Multimodal integration

Applications: Music perception, Sound art, Interactive installation, Sonic biomimetics, Urban planning, Soundscape design, Mental resilience, Community interaction

Software: Custom Open-Source Tools

Four interconnected applications (in preparation for open-source release) that both artists and researchers can use in live performance contexts:

ArtDAQ: ArtDAQ is an acquisition platform that integrates multiple sensor types with real-time streaming, local buffering, and unified timestamping. It is compatible with the Lab Streaming Layer (LSL) and Open Sound Control (OSC) protocols.

- Central Synchronization: GPS-based master clock operating as a stratum 1 time source, distributing time signals via NTP/PTP, LTC, and MTC for sub-millisecond alignment across all systems.

- PLUX-Raspberry Pi Biosignal System: Adapted to work with PLUX 8-channel biosignal devices (e.g., for heart rate, electrodermal activity, respiration, facial EMG) connected via USB to dedicated Raspberry Pi nodes. Each Raspberry Pi provides local data acquisition, timestamping, buffering, and wireless streaming to a central server. The nodes are battery-powered for mobile deployment, and the system scales from 5 to 90+ participants.
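The clock alignment behind the central synchronization can be illustrated with the standard NTP offset calculation. The following is a minimal sketch, not ArtDAQ's actual implementation: the names `Exchange`, `offset_and_delay`, `best_offset`, and `to_master_time` are hypothetical, and it assumes a roughly symmetric network path between a node and the master clock.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Exchange:
    """One request/response exchange between a node and the time server."""
    t0: float  # node clock: request sent
    t1: float  # server clock: request received
    t2: float  # server clock: response sent
    t3: float  # node clock: response received


def offset_and_delay(x: Exchange) -> tuple[float, float]:
    """Standard NTP estimate of clock offset and round-trip delay (seconds)."""
    offset = ((x.t1 - x.t0) + (x.t2 - x.t3)) / 2.0
    delay = (x.t3 - x.t0) - (x.t2 - x.t1)
    return offset, delay


def best_offset(exchanges: list[Exchange]) -> float:
    """Pick the offset from the exchange with the smallest round-trip delay,
    since that exchange is least affected by network jitter."""
    offset, _ = min((offset_and_delay(x) for x in exchanges),
                    key=lambda pair: pair[1])
    return offset


def to_master_time(local_timestamp: float, offset: float) -> float:
    """Map a node-local timestamp onto the master clock's timeline."""
    return local_timestamp + offset
```

In this sketch a node would collect several exchanges, keep the offset from the one with the lowest delay, and apply it to every locally timestamped sample before streaming.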

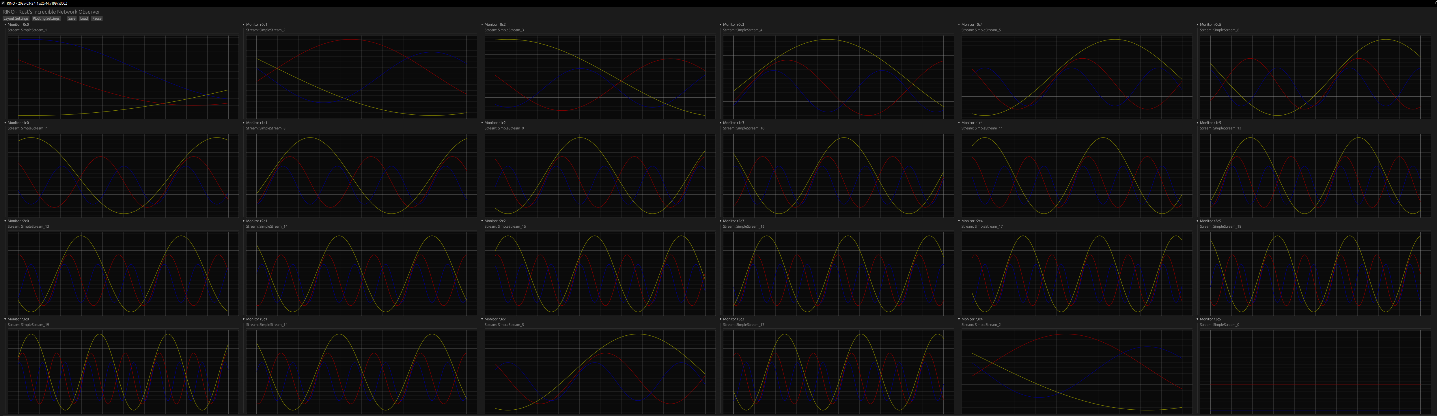

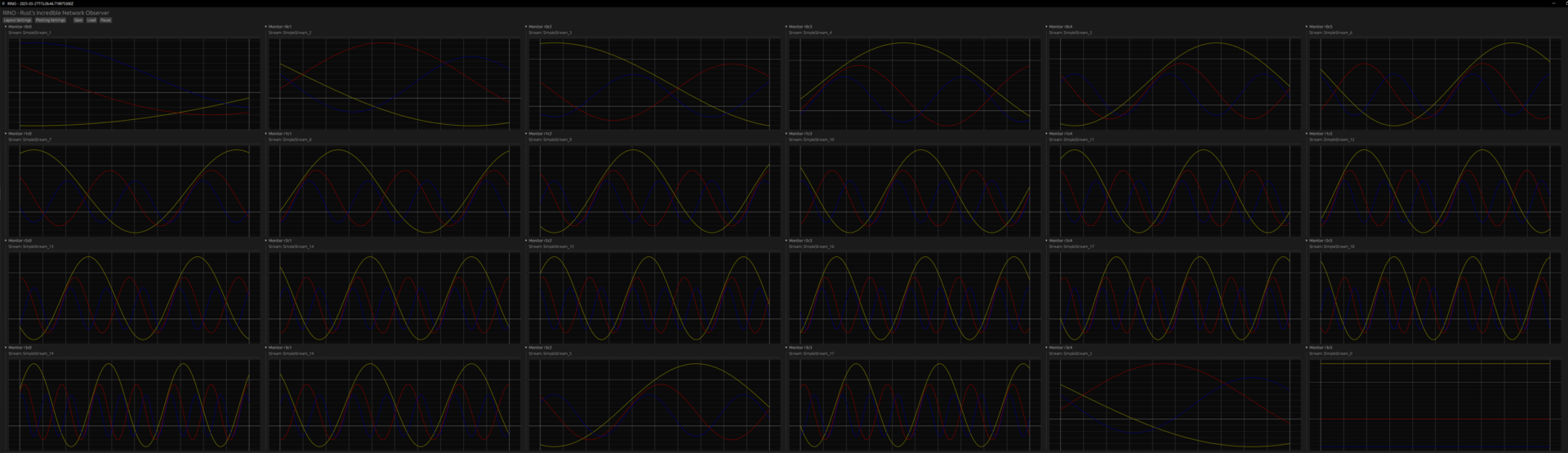

RINO: RINO is a high-performance software application designed for scalable, real-time visualization and storage of incoming data streams. Built for robustness and low latency, RINO can handle up to 150 concurrent streams, making it well suited for whole-audience data collection. It provides an overview of incoming signals, supports real-time inspection of signal quality, and forms a foundation for live feedback and adaptive experiments.
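As an illustration of what real-time inspection of signal quality can involve, the sketch below tracks the effective sample rate and dropouts of each stream from its timestamps. This is a hypothetical example and does not reflect RINO's internals: `StreamMonitor`, `flag_streams`, the gap factor, and the 5% tolerance are all assumptions chosen for illustration.

```python
from __future__ import annotations

from collections import deque


class StreamMonitor:
    """Rolling quality check for one stream, based on sample timestamps."""

    def __init__(self, nominal_rate_hz: float, window: int = 1000,
                 gap_factor: float = 2.5):
        self.nominal_rate_hz = nominal_rate_hz
        self.max_interval = gap_factor / nominal_rate_hz
        self.timestamps: deque[float] = deque(maxlen=window)
        self.gaps = 0  # cumulative count of dropouts since start

    def push(self, timestamp: float) -> None:
        """Register one sample; count a gap if it arrives far too late."""
        if self.timestamps and timestamp - self.timestamps[-1] > self.max_interval:
            self.gaps += 1
        self.timestamps.append(timestamp)

    def effective_rate(self) -> float:
        """Samples per second over the current window (0.0 if unknown)."""
        if len(self.timestamps) < 2:
            return 0.0
        span = self.timestamps[-1] - self.timestamps[0]
        return (len(self.timestamps) - 1) / span if span > 0 else 0.0


def flag_streams(monitors: dict[str, StreamMonitor],
                 tolerance: float = 0.05) -> list[str]:
    """Return the names of streams with gaps or an off-nominal sample rate."""
    flagged = []
    for name, monitor in monitors.items():
        nominal = monitor.nominal_rate_hz
        rate_off = abs(monitor.effective_rate() - nominal) > tolerance * nominal
        if rate_off or monitor.gaps > 0:
            flagged.append(name)
    return sorted(flagged)
```

One monitor per stream keeps the check cheap, so an overview of this kind could in principle run alongside many concurrent streams.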

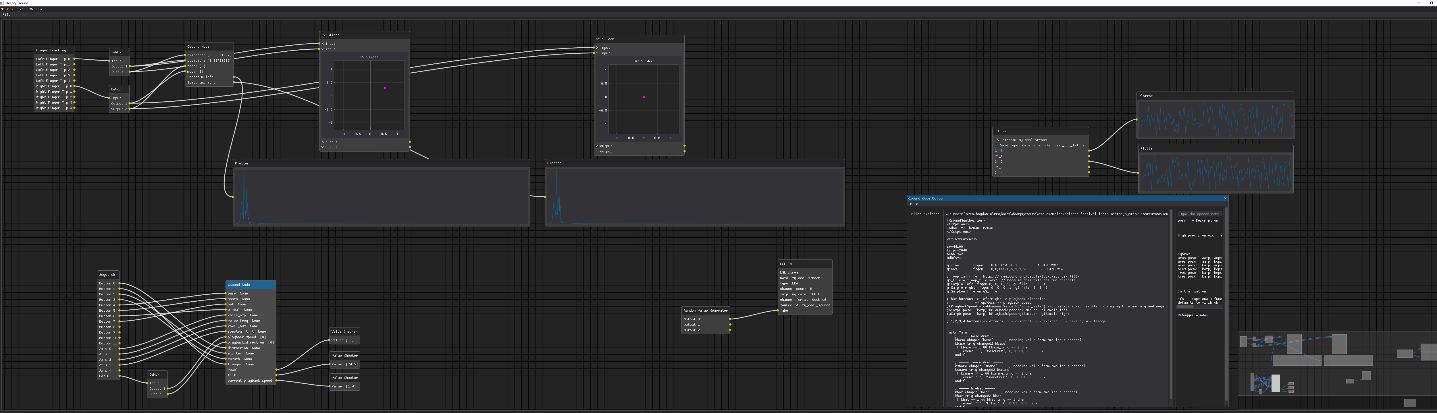

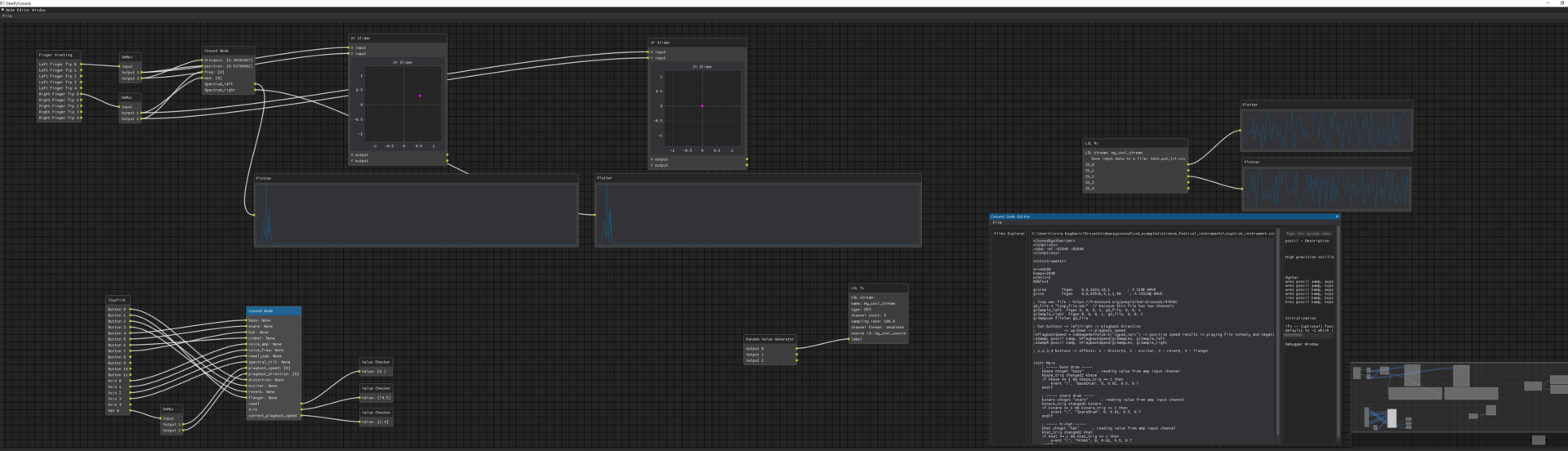

NERD: For smaller-scale or exploratory studies, NERD provides a highly flexible, node-based visual programming interface. It enables researchers to connect modular processing blocks to receive, process, convert, visualize, and store data streams in real time. Supporting a variety of data streaming protocols, NERD is well suited for setups involving up to 10 participants, prototyping of interactive systems, and custom data routing. Use cases include sonification of biosignals, gesture-based control using camera input, and buffered streaming of asynchronous signals to audio engines. NERD has proven effective in addressing the evolving needs of signal routing, format interoperability, and interactive experimentation in live and research-driven contexts.
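The idea of connecting modular processing blocks can be sketched in a few lines of Python. This is an illustrative analogy only, not NERD's API: the block names `moving_average` and `scale`, the `connect` helper, and the heart-rate-to-pitch mapping (60 to 120 bpm mapped to 220 to 440 Hz) are assumptions made for the example.

```python
from collections import deque
from typing import Callable, Iterable, Iterator

# A block takes a stream of samples and returns a transformed stream.
Block = Callable[[Iterator[float]], Iterator[float]]


def moving_average(n: int) -> Block:
    """Smoothing block: mean of the last n samples."""
    def block(samples: Iterator[float]) -> Iterator[float]:
        window: deque = deque(maxlen=n)
        for sample in samples:
            window.append(sample)
            yield sum(window) / len(window)
    return block


def scale(in_lo: float, in_hi: float, out_lo: float, out_hi: float) -> Block:
    """Conversion block: clip to the input range, then map it linearly."""
    def block(samples: Iterator[float]) -> Iterator[float]:
        for sample in samples:
            clipped = min(max(sample, in_lo), in_hi)
            yield out_lo + (clipped - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)
    return block


def connect(*blocks: Block) -> Callable[[Iterable[float]], Iterator[float]]:
    """Chain blocks so each one consumes the output of the previous one."""
    def pipeline(samples: Iterable[float]) -> Iterator[float]:
        stream = iter(samples)
        for block in blocks:
            stream = block(stream)
        return stream
    return pipeline


# Hypothetical sonification chain: smoothed heart rate (bpm) to pitch (Hz).
heart_rate_to_pitch = connect(moving_average(2), scale(60, 120, 220, 440))
```

Because the blocks are generators, samples flow through the chain one at a time, which matches the streaming character of the use cases listed above.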

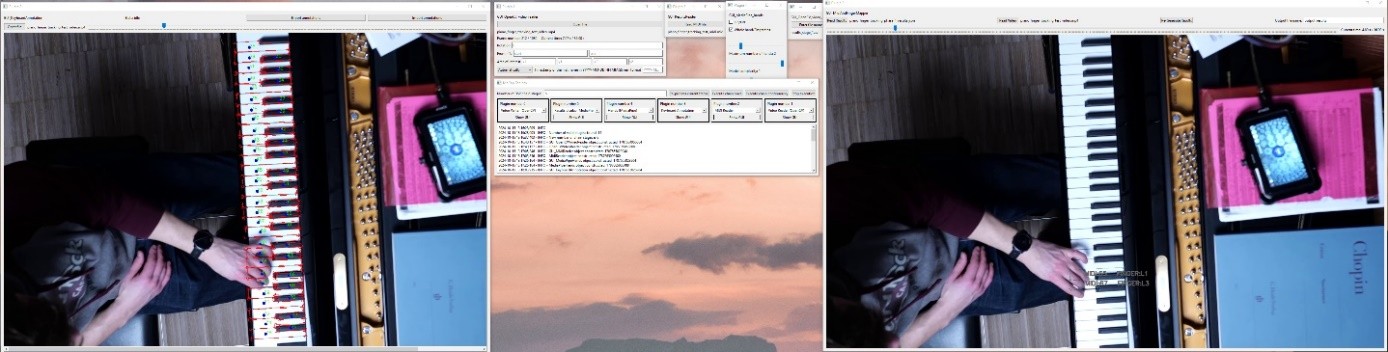

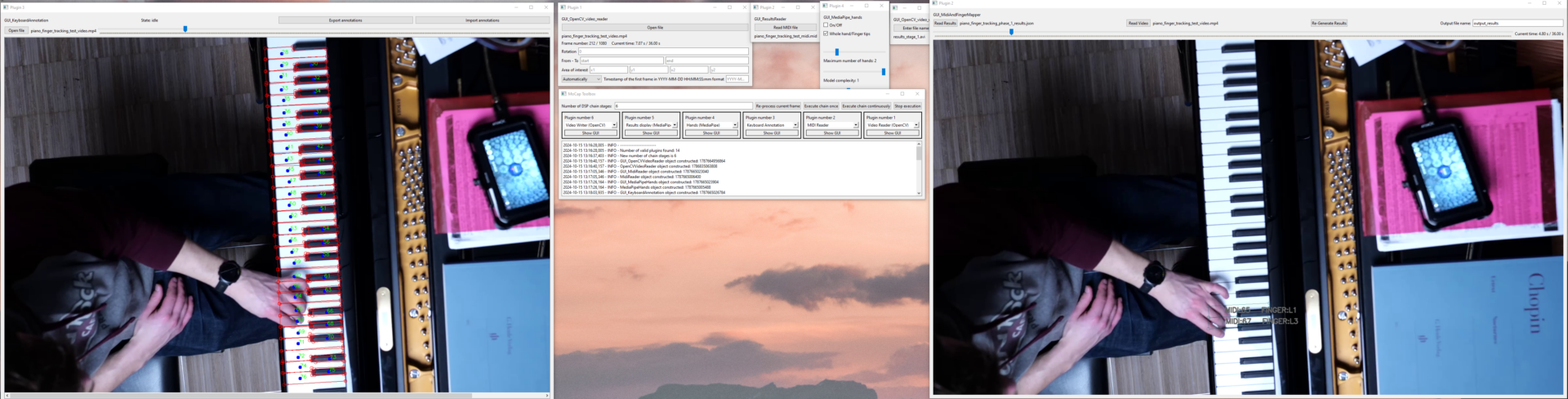

APAP: APAP is a modular framework that can be used for post-hoc signal analysis, multimodal data visualization, or real-time motion tracking in performance research. Built on a plugin-based digital signal processing architecture, APAP enables the alignment and exploration of diverse data types—such as audio, video, physiological signals, motion data, and annotations—on a shared, flexible timeline. It supports pose and gesture recognition, object and person tracking, and multi-camera synchronization, with a PyQt-based interface for video playback, annotation, and anonymization. By integrating motion data with synchronized biosignals (e.g., from PLUX devices), wearable sensors, and audio, APAP facilitates rich, time-aligned analysis of complex performance scenarios.
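Placing different data types on a shared timeline usually means matching streams with different sampling rates by timestamp. The sketch below shows one simple way to do this, nearest-neighbour matching of sensor samples to video frame times. It is not taken from APAP: `nearest_index`, `align_to_frames`, and the `max_gap` tolerance are hypothetical, and it assumes all timestamps already share one clock.

```python
from bisect import bisect_left
from typing import Optional, Sequence


def nearest_index(timestamps: Sequence[float], t: float) -> int:
    """Index of the timestamp closest to t (timestamps sorted, non-empty)."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Choose between the neighbours on either side of t.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align_to_frames(frame_times: Sequence[float],
                    sample_times: Sequence[float],
                    sample_values: Sequence[float],
                    max_gap: float) -> list:
    """For each video frame, pick the nearest sensor sample.

    Frames with no sample within max_gap seconds get None, so missing
    data stays visible instead of being silently filled in.
    """
    if not sample_times:
        return [None] * len(frame_times)
    aligned: list = []
    for frame_time in frame_times:
        j = nearest_index(sample_times, frame_time)
        within = abs(sample_times[j] - frame_time) <= max_gap
        value: Optional[float] = sample_values[j] if within else None
        aligned.append(value)
    return aligned
```

Interpolation would be a reasonable alternative for smooth signals; nearest-neighbour matching is used here because it also works for discrete data such as annotations.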

This infrastructure has been deployed in ReSilence artist collaborations and broader concert research:

Tim Otto Roth: “Theatre of Memory” – Audiovisual, spatial sound art installation exploring memory, sound, and urban space. The multimodal measurement captured how participants engaged with the immersive environment across physiological, behavioral, and subjective dimensions.

Paul Louis: “B:N:S – Biomimetic Sound Network” – Interactive installation reimagining urban soundscapes through sonic biomimetics. Physiological responses to soundscape transitions, spatial movement patterns, and self-reported experiences of transformation from pollution to symbiosis were measured.

Additional Deployments: Beyond ReSilence, the infrastructure supports concert research in Western classical music, jazz, rap, beatboxing, and Hindustani classical music, both in the ArtLab and at external venues (real concert halls, public spaces, online platforms).

Lessons learned:

Key Lessons from Implementation

– PLUX-Raspberry Pi integration overcomes Bluetooth scalability limitations while maintaining minimal intrusiveness through discreet device placement

– Centralized GPS-based time synchronization is essential for valid event-based analyses when integrating heterogeneous data sources

– Multimodal data integration (physiological, behavioral, self-report) provides richer insights than single measures, revealing how musical features and shared experiences manifest in bodily responses

– Iterative sensor optimization substantially reduces participant distraction: careful wearable design and automated synchronization preserve immersion while improving data reliability

– Real-time visualization enables quality monitoring and adaptive experimental control during data collection

Adaptability / interoperability:

Technical Adaptability

– Modular and scalable: From individual participants to 90+ audience members

– Mobile deployment: Battery-powered Raspberry Pi nodes enable research beyond the fixed auditorium in real concert venues and urban spaces

– Customizable to artistic concepts: Measurement approaches adapt to different performance genres, spatial configurations, and aesthetic requirements

– Interoperable protocols: LSL and OSC compatibility enables integration with diverse sensor types and third-party systems
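To give a sense of what OSC compatibility means at the wire level, the sketch below encodes a message with float arguments following the OSC 1.0 format and sends it over UDP, using only the Python standard library. It is illustrative rather than a description of the tools above: the helper names and the address `/artlab/p01/eda` are hypothetical, and a real setup would more likely rely on an existing OSC library.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """ASCII string, null-terminated and zero-padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", value) for value in values)  # big-endian
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_osc(message: bytes, host: str, port: int) -> None:
    """Send one encoded OSC message as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))


# Hypothetical example: one electrodermal activity sample for participant 01.
packet = osc_message("/artlab/p01/eda", 0.42)
```

Any OSC-capable audio engine or visual environment listening on the chosen port could then receive the value, which is what makes the protocol useful for connecting measurement data to artistic systems.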

Institution

Max Planck Institute for Empirical Aesthetics

Technical Partners

Software / Publications (forthcoming open-source release)

Related publication: Bogdanić, Stenschke et al. (in preparation)