+ WHAT?

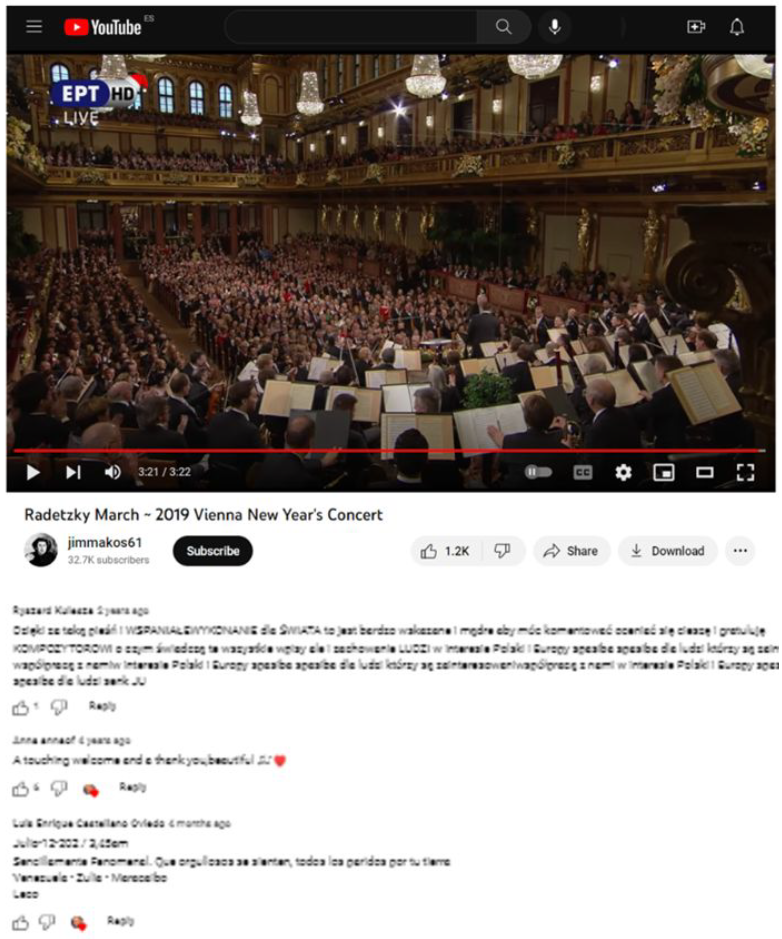

Sentiment analysis from crawled social media data and reviews

+ HOW?

Fine-grained context-less and contextual emotion classification using transformer-based neural networks trained on synthetic data generated by large language models

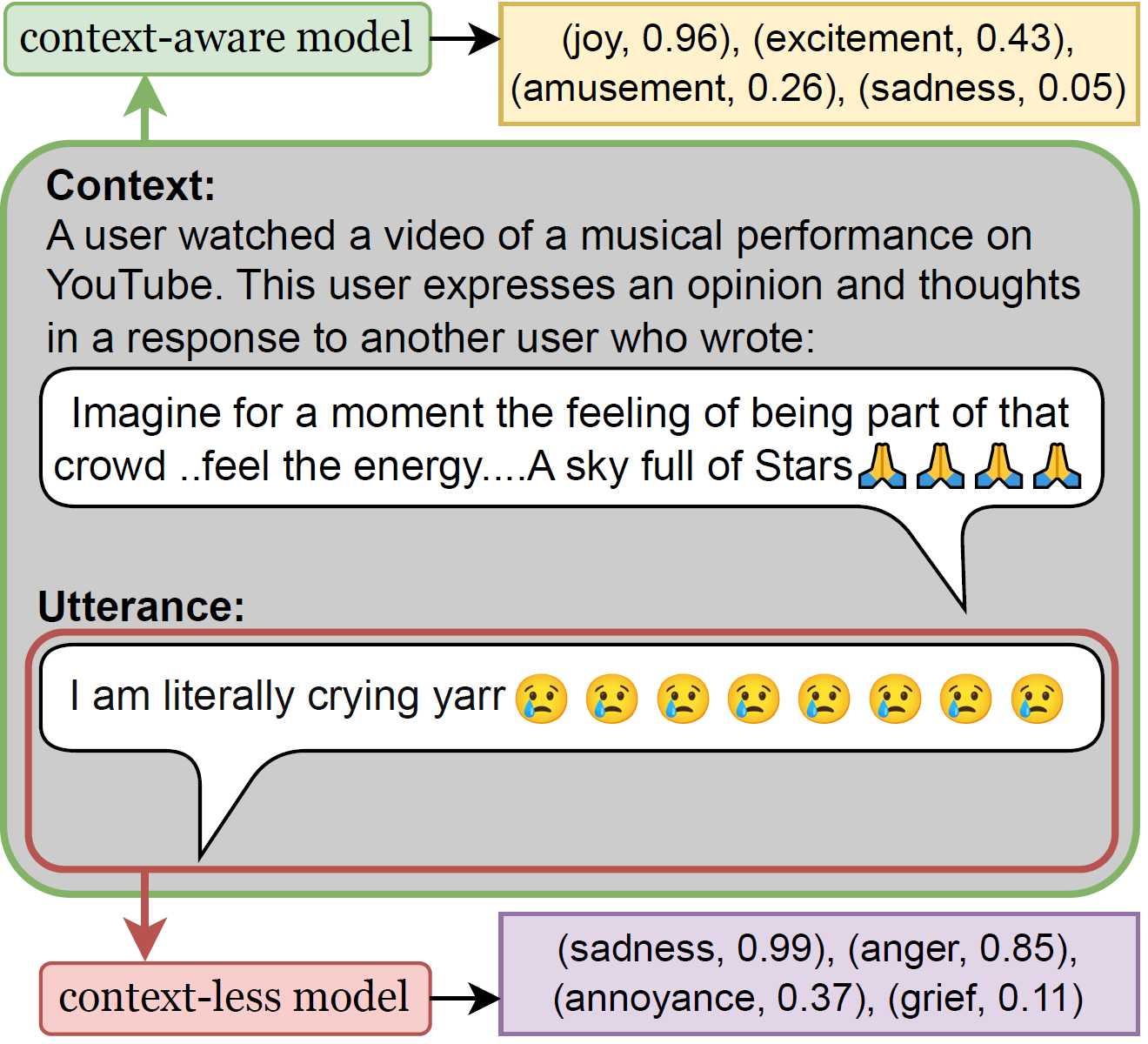

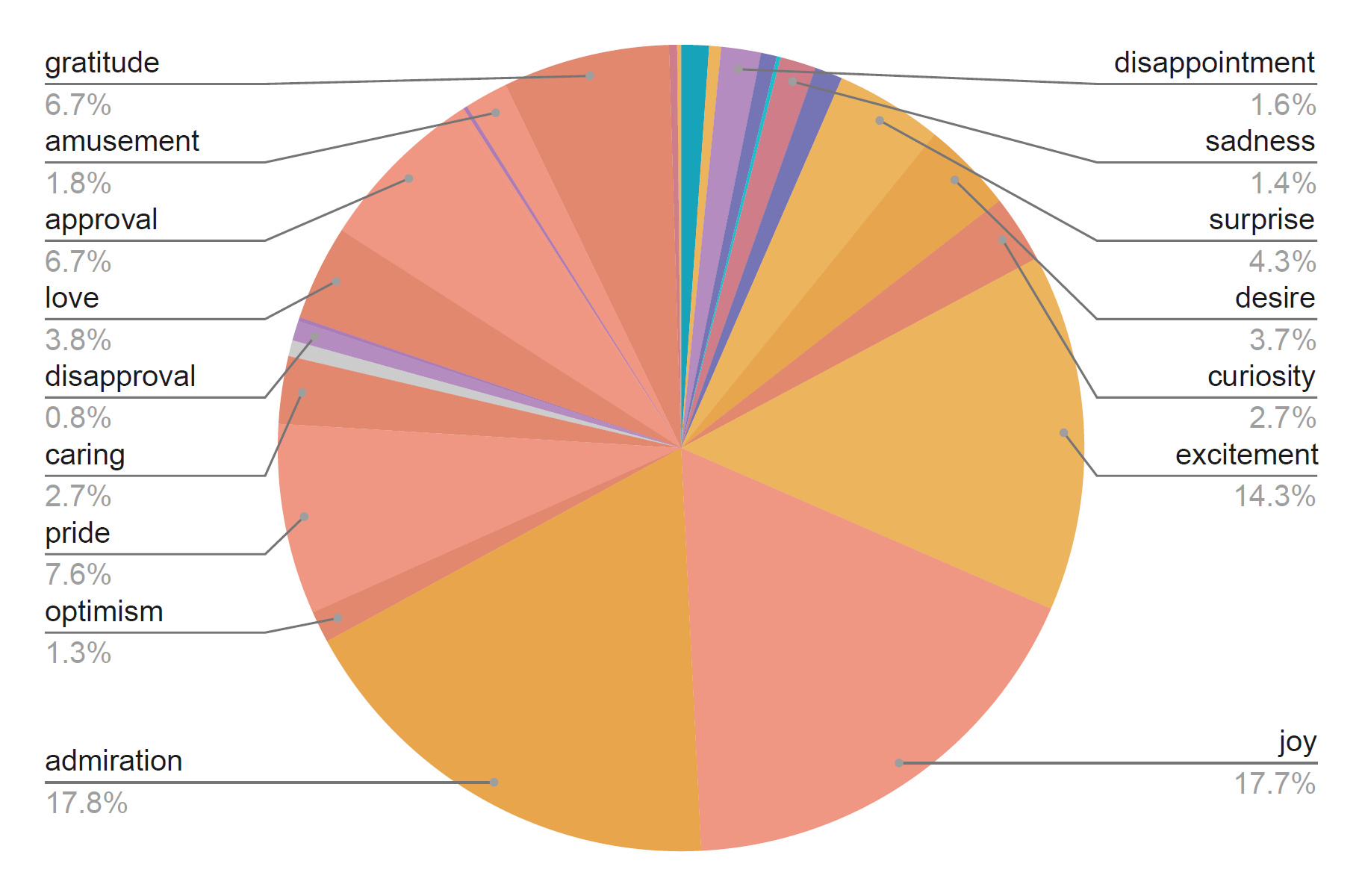

It is of high importance for creators of novel acoustic experiences to receive feedback from their audiences, including those in a virtual setting. Given user comments about the work on social media, it is desirable to automatically get a detailed view of people’s perceptions, including their explicitly expressed feelings. It is crucial to identify emotions within a wide spectrum of emotional categories, so as not to miss essential details that were communicated through language. As comments on social media are often brief, it is also important to properly situate them in context to avoid ambiguous emotional interpretations. To address this need in ReSilence, an Emo Pillars suite has been developed – a range of models for fine-grained emotion classification (with and without context), released along with the synthetic dataset on which they were trained. The Emo Pillars models are expected to be of high interest to various stakeholders in the concert creation industry, as well as to authors of artistic implementations.

Keywords: fine-grained emotion classification, synthetic emotion dataset, context-aware models, multi-label classifiers, semantic diversity, BERT-based models, emotion analysis pipeline, user sentiment insights

Emo Pillars is a collection of neural multi-label classifiers for 28 emotional classes. The project includes:

- The Collection: The full set of context-aware and context-less models described below.

- Dataset: This synthetic dataset is designed for fine-grained emotion classification. It’s created using a multi-step LLM-based pipeline detailed in the project’s paper.

Emo Pillars Models

The Emo Pillars collection contains several specialized models, with usage and evaluation details available on their respective pages:

- Context-Aware Models:

  - 28 Emotional Classes: A multi-label classifier that takes context and a character description to extract emotions from an utterance.

  - Fine-Tuned on EmoContext: A model fine-tuned on a 4-class EmoContext dataset (angry, sad, happy, and others). It can analyze either a context-plus-utterance or a three-turn dialogue.

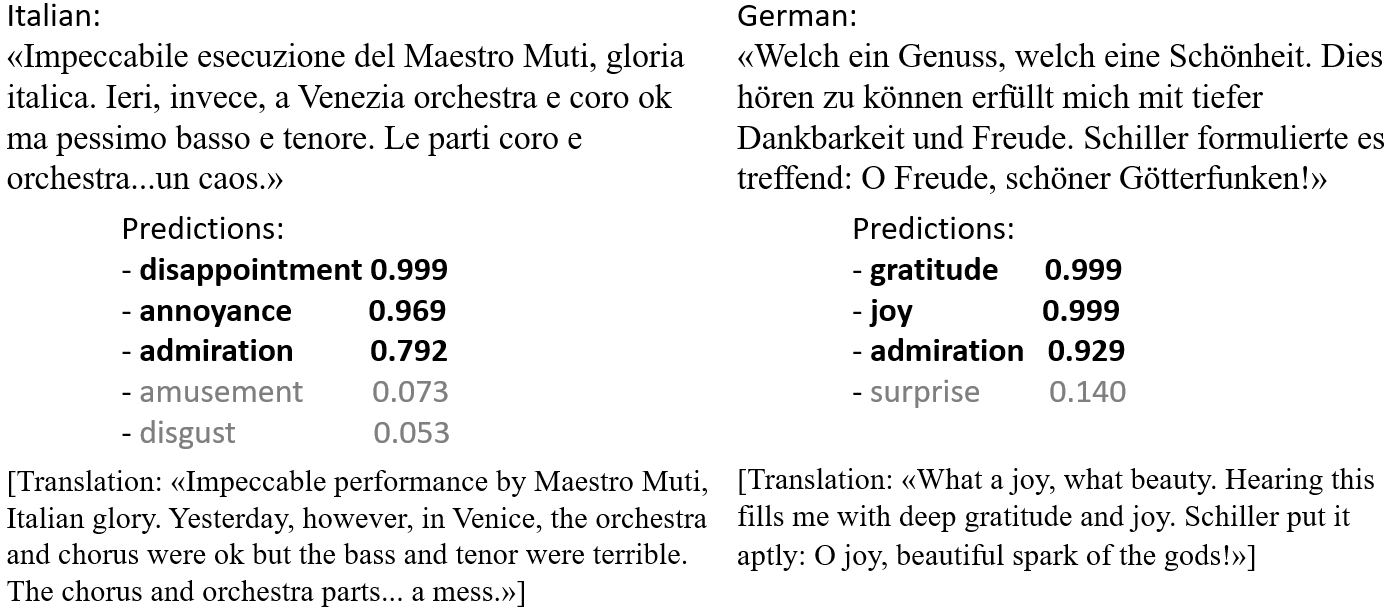

- Context-Less Models:

  - 28 Emotional Classes: Detects emotions in the entire input, including any provided context.

  - Fine-Tuned on GoEmotions: A version fine-tuned on the GoEmotions dataset, also for 28 classes.

  - Fine-Tuned on 7 Classes: This model is fine-tuned on a 7-class dataset (anger, disgust, fear, sadness, joy, shame, guilt).
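To illustrate what "multi-label" means for the classifiers listed above, here is a minimal sketch of turning per-class scores into a set of predicted emotions. It assumes the model emits one raw logit per class and that each class is decided independently with a sigmoid and a threshold, which is common for BERT-style multi-label classifiers but is not confirmed here as the Emo Pillars decoding procedure. The label list, the `decode_multilabel` helper, the example logits, and the 0.5 threshold are all hypothetical.

```python
import math

# Illustrative subset of labels (assumption); the actual models cover 28 classes.
LABELS = ["joy", "admiration", "disappointment", "neutral"]


def sigmoid(x: float) -> float:
    """Map a raw logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def decode_multilabel(logits, labels=LABELS, threshold=0.5):
    """Return (label, probability) pairs whose probability meets the threshold.

    Each class is scored independently, so zero, one, or several
    emotions can be returned for the same input text.
    """
    if len(logits) != len(labels):
        raise ValueError("expected exactly one logit per label")
    scored = [(label, sigmoid(z)) for label, z in zip(labels, logits)]
    selected = [(label, p) for label, p in scored if p >= threshold]
    # Strongest emotions first.
    return sorted(selected, key=lambda pair: pair[1], reverse=True)


# Hypothetical logits for a single comment.
example_logits = [2.0, 0.3, -1.5, -3.0]
predicted = decode_multilabel(example_logits)
for label, probability in predicted:
    print(f"{label}: {probability:.2f}")
```

Unlike a single-label softmax classifier, this scheme lets one short comment carry several emotions at once, which matters when the goal is not to miss details expressed in the text.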

The Emo Pillars models, dataset, and the pipeline that generates diverse, labelled synthetic data by extracting knowledge from LLMs for fine-grained context-less and context-aware emotion classification were presented at the 63rd Annual Meeting of the Association for Computational Linguistics in July 2025 (ACL 2025, Vienna, Austria): https://2025.aclweb.org/

Lessons learned:

Main results and evaluations can be found in the paper published in Findings of the Association for Computational Linguistics (ACL 2025): https://aclanthology.org/2025.findings-acl.10/

Most sentiment analysis datasets lack the context in which an opinion was expressed, although that context is often crucial for understanding emotions, and they cover only a few emotion categories. Foundation large language models tend to over-predict emotions and are too resource-intensive. When they are used for synthetic data generation, the produced examples generally lack semantic diversity.

The Emo Pillars models are accessible, lightweight BERT-type encoder models trained on data from an LLM-based data synthesis pipeline that focuses on expanding the semantic diversity of examples. The pipeline grounds emotional text generation in a corpus of narratives, resulting in non-repetitive utterances with unique contexts across 28 emotion classes. The collection also includes task-specific models, obtained by fine-tuning the base models on specific downstream tasks. Evaluation scores demonstrate that the base models are highly adaptable to new domains.

Along with the models, a dataset of 100,000 contextual and 300,000 contextless examples is released for fine-tuning various types of pre-trained language models. The dataset has been validated through statistical analysis and human evaluation, which confirmed that the measures taken to diversify utterances and contexts were effective.

Adaptability / interoperability:

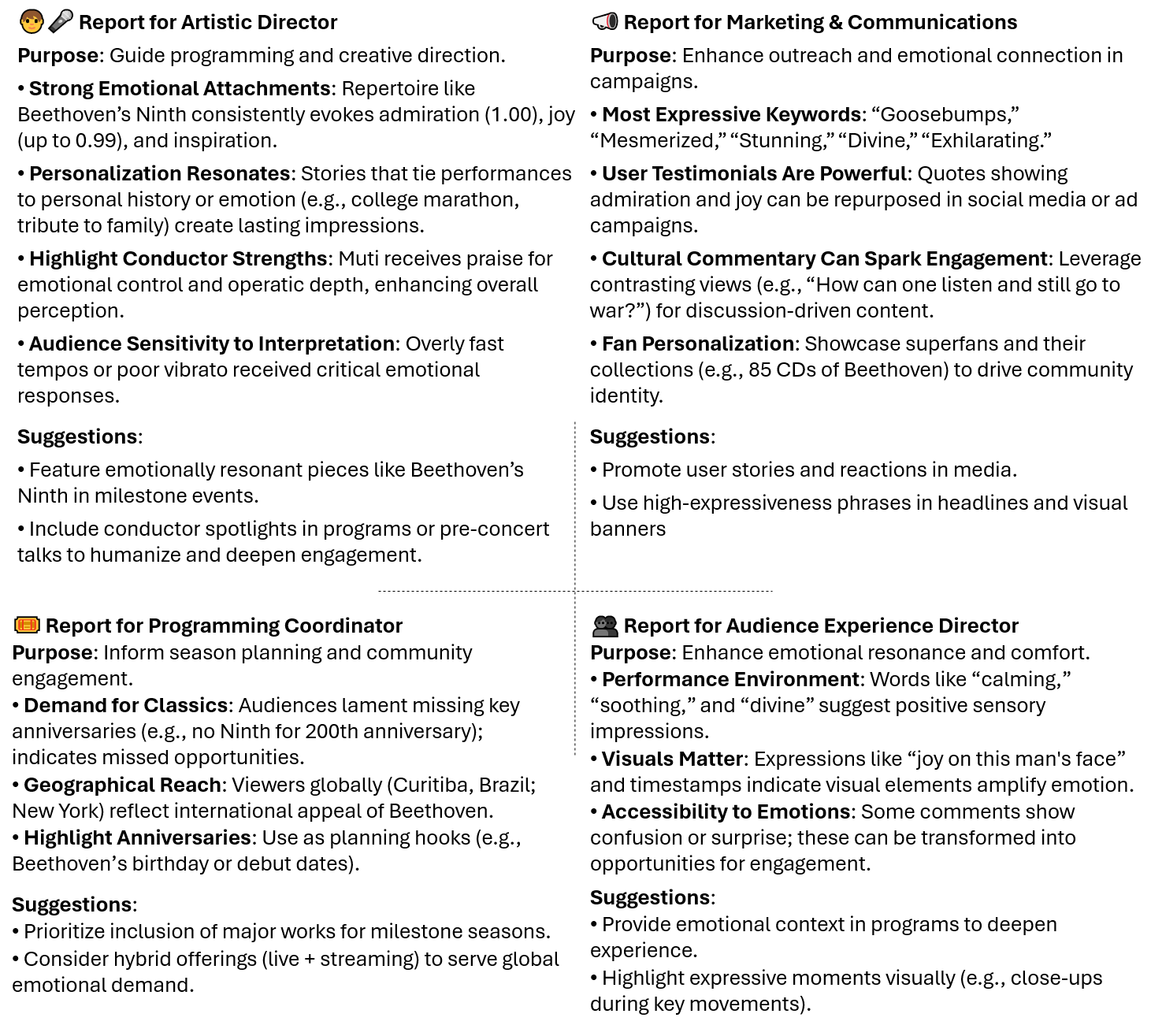

The model outputs, which include sentiments with their expressiveness levels, can be easily integrated into personalized report generation systems. Such systems summarize the emotion-aspect associations found in a collection of user comments and produce structured analyses with insights and suggestions tailored to specific roles involved in organizing artistic performances.
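As a rough illustration of how such outputs could feed a report, the sketch below aggregates per-comment emotion scores by aspect. The record layout (an `aspect` string plus an `emotions` mapping from emotion name to expressiveness level), the `summarize_by_aspect` helper, and the sample values are assumptions made for this example; they do not describe the actual output format of the models or of any ReSilence reporting component.

```python
from collections import defaultdict


def summarize_by_aspect(records):
    """Aggregate emotion levels per (aspect, emotion) pair.

    Each record is assumed to look like:
        {"aspect": "sound", "emotions": {"joy": 0.9}}
    Returns a dict keyed by (aspect, emotion) with the number of
    mentions and the mean expressiveness level.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for record in records:
        for emotion, level in record["emotions"].items():
            key = (record["aspect"], emotion)
            sums[key] += level
            counts[key] += 1
    return {
        key: {"mentions": counts[key], "mean_level": sums[key] / counts[key]}
        for key in sums
    }


# Hypothetical classifier outputs for three user comments.
records = [
    {"aspect": "sound", "emotions": {"joy": 0.9, "admiration": 0.7}},
    {"aspect": "sound", "emotions": {"joy": 0.6}},
    {"aspect": "venue", "emotions": {"disappointment": 0.8}},
]

summary = summarize_by_aspect(records)
for (aspect, emotion), stats in summary.items():
    print(f"{aspect} / {emotion}: {stats['mentions']} mention(s), "
          f"mean level {stats['mean_level']:.2f}")
```

A structured summary of this kind could then be passed to a report generator, which would phrase the insights for a given role, such as a sound designer or a venue organizer.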