+ WHAT?

The mental and emotional strain caused by chaotic urban soundscapes.

+ HOW?

Transforming urban noise into harmonious soundscapes via a biomimetic algorithm and interactive VR that responds to user movement.

The core concept of B:N:S – Biomimetic Sound Network is to transform urban noise pollution into immersive, therapeutic soundscapes through a nature-inspired sound algorithm. Drawing from the principle of biophony—where organisms in natural environments occupy distinct frequency niches—the project reimagines city sounds not as chaotic byproducts, but as opportunities for harmony and interaction. The main challenge addressed is the negative impact of urban soundscapes on mental well-being. By developing an adaptive algorithm that dynamically assigns frequency ranges to symbolic “vehicles” (represented by interactive Orbs), the project seeks to prevent acoustic masking and create a more health-promoting acoustic environment.

The project engages with the urban dimension by reflecting on the future of mobility, digitalization, and sound in hybrid cities. The installation serves as a speculative space that mirrors city life. It features two phases, “Pollution” and “Symbiosis”, in which visitors become agents of change. Through movement and proximity, participants shape the sonic and visual environment, illustrating the potential for citizen agency and responsive public spaces. Ultimately, B:N:S challenges conventional urban planning by proposing an emotionally resonant, biomimetic alternative for managing sound in cities, highlighting how design and technology can foster coexistence, mental resilience, and new modes of urban interaction.

Collaborations

– Impulse Audio Lab (IAL): Co-developed the biomimetic sound algorithm, contributing expertise in audio algorithm development.

– MPIEA (Max Planck Institute for Empirical Aesthetics): Primary partner for the stress-testing experiment. Hosted the full test setup. The VR environment was custom-built for MPIEA to allow immersive evaluation and data collection.

– External Product Designer Jordi Esteve Pastor: Consulted on the CAD modeling and prototyping of the interactive Orb, translating design concepts into buildable forms.

– ZKM | Zentrum für Kunst und Medien Karlsruhe: Identified as a key exhibition partner. Contact has recently been re-established by Holger Stenschke (MPIEA), and a response from Alistair Hudson (ZKM) is awaited to finalize planning.

biomimetic sound, urban soundscape, responsive environments, AVAS design, immersive experience, spatial audio, stress-aware systems, smart city sound design, Ambisonics

Immersive Experiments

Biomimetic Sound Algorithm

A core outcome of the project was the development of a biomimetic sound algorithm, inspired by biophony, which assigns adaptive frequency ranges to symbolic urban agents (interactive orbs) in order to transform chaotic urban sound into structured, calming soundscapes. These frequency assignments shift with proximity and interaction, enabling the system to respond fluidly to user behavior.

Format: Custom patch developed in Max/MSP

Description: The algorithm mimics principles of biophony by assigning dynamic frequency “niches” to moving sound sources (interactive orbs), avoiding masking and promoting auditory balance. Input parameters include unique IDs, real-time position, and proximity data. The system switches between two sonic states:

- Pollution: Dissonant, aggressive sound layers reflecting chaotic cityscapes

- Symbiosis: Harmonic, calming sound fields inspired by nature
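The Max/MSP patch itself is not reproduced here, but the niche idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the project's algorithm: `assign_niches`, `Niche`, and `overlaps` are hypothetical names, the 100 Hz to 8 kHz range is a placeholder, and splitting the range into equal bands on a logarithmic scale, ordered by orb ID, is only one possible allocation rule. In the Pollution state every orb shares the full range, so their spectra overlap and mask each other; in the Symbiosis state each orb receives its own non-overlapping band.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Niche:
    """A frequency band in Hz occupied by one orb (hypothetical structure)."""
    low_hz: float
    high_hz: float


def overlaps(a: Niche, b: Niche) -> bool:
    """True if two bands share any frequency range (a shared edge does not count)."""
    return a.low_hz < b.high_hz and b.low_hz < a.high_hz


def assign_niches(orb_ids, state, f_min=100.0, f_max=8000.0):
    """Map each orb ID to a frequency band for the given sonic state (sketch)."""
    ids = sorted(orb_ids)  # unique IDs give a stable, repeatable assignment
    if not ids:
        return {}
    if state == "pollution":
        # Every orb competes for the full range, so their spectra overlap (masking).
        return {orb: Niche(f_min, f_max) for orb in ids}
    if state == "symbiosis":
        # Split the range into contiguous, non-overlapping bands of equal width
        # on a logarithmic frequency scale, one band per orb.
        ratio = (f_max / f_min) ** (1.0 / len(ids))
        return {
            orb: Niche(f_min * ratio ** k, f_min * ratio ** (k + 1))
            for k, orb in enumerate(ids)
        }
    raise ValueError(f"unknown state: {state!r}")
```

For example, `assign_niches([7, 3, 12], "symbiosis")` gives orb 3 the lowest band and orb 12 the highest. A real-time version could recompute the bands whenever orbs join, leave, or change proximity.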

The algorithm is reactive and runs in real time, controlling multichannel output to an ambisonic speaker layout and headphones (in VR mode).
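To illustrate how a moving source's position can feed a multichannel ambisonic output, the sketch below encodes one sample into first-order ambisonics (AmbiX convention: ACN channel order W, Y, Z, X with SN3D normalization) and applies a simple inverse-distance gain as a stand-in for proximity. It assumes first-order encoding, a listener facing the +x axis with y to the left and z up, and a hypothetical `encode_foa` helper; the patch's actual spatialization and distance model are not documented here. Decoding to the speaker layout or to binaural headphone output would be a separate step.

```python
import math


def encode_foa(sample, source_pos, listener_pos, ref_distance=1.0):
    """Encode one mono sample into first-order AmbiX (ACN order W, Y, Z, X; SN3D).

    Positions are (x, y, z) in metres with x forward, y left, z up (assumed).
    """
    dx = source_pos[0] - listener_pos[0]
    dy = source_pos[1] - listener_pos[1]
    dz = source_pos[2] - listener_pos[2]
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)
    azimuth = math.atan2(dy, dx)                    # counterclockwise from front
    elevation = math.atan2(dz, math.hypot(dx, dy))  # upward from horizontal plane
    # Inverse-distance gain, clamped so sources inside ref_distance are not boosted.
    gain = ref_distance / max(distance, ref_distance)
    s = sample * gain
    w = s
    y = s * math.sin(azimuth) * math.cos(elevation)
    z = s * math.sin(elevation)
    x = s * math.cos(azimuth) * math.cos(elevation)
    return (w, y, z, x)
```

A source 2 m straight ahead, for instance, lands only in the W and X channels at half amplitude.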

Demonstrator of the Max/MSP tool

VR Testing Environment

A fully functional VR setup was implemented to conduct controlled stress-testing of the installation. This environment replicates the spatial and acoustic experience of the physical setup and is used to evaluate whether the algorithm can demonstrably reduce user stress levels, measured through indicators such as heart rate (HR) and behavioral feedback. A treadmill is integrated to simulate physical movement within a confined space.

Format: Unreal Engine project

Hardware: Meta Quest 3 headset + Virtuix Omni VR treadmill

Software: Unreal Engine 5.4.4, SteamVR, Reaper, OculusHub, Omni Core

Description: A fully immersive VR simulation was developed to stress-test the sound environment before the physical installation is set up. Users navigate the installation virtually using the Omni treadmill while the sound responds to their movement and proximity in real time. Heart rate (HR) sensors are integrated to assess psychophysiological stress responses during exposure to the “Pollution” and “Symbiosis” states.
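One common way to read heart rate as a stress indicator is to compare each sound condition with a baseline recording. The helper below, `hr_change_from_baseline` (a hypothetical name), is a minimal sketch of that comparison for one participant; the sampling, artifact removal, and statistics used at MPIEA are not described here and would need to be added.

```python
from statistics import mean


def hr_change_from_baseline(baseline_bpm, condition_bpm):
    """Return each condition's mean heart rate minus the mean baseline heart rate (bpm).

    baseline_bpm: list of heart rate samples from the baseline recording.
    condition_bpm: dict mapping condition name -> list of heart rate samples.
    """
    if not baseline_bpm:
        raise ValueError("baseline recording is empty")
    base = mean(baseline_bpm)
    return {name: mean(samples) - base for name, samples in condition_bpm.items()}
```

Positive values indicate a higher heart rate than at baseline; with a treadmill, physical exertion raises these values regardless of the soundscape.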

Scenarios / Test / Exhibitions:

At this stage, the project has undergone a first run in a controlled environment at the Max Planck Institute for Empirical Aesthetics (MPIEA). The focus was on validating the technical setup, including the VR environment, the biomimetic sound algorithm, and heart rate tracking as a stress indicator. The test demonstrated system stability and confirmed the feasibility of immersive evaluation through biometric feedback.

Although no public exhibition has taken place yet, planning is in progress: ZKM Karlsruhe has been identified as a potential future venue, and communication is currently underway. In the future, the system could be applied in interactive installations, urban mobility labs, or public space interventions, serving as a prototype for responsive sound environments in smart cities. Additionally, integration into AVAS for electric vehicles is envisioned, offering a functional application in urban sound design beyond the cultural field.

Lessons learned:

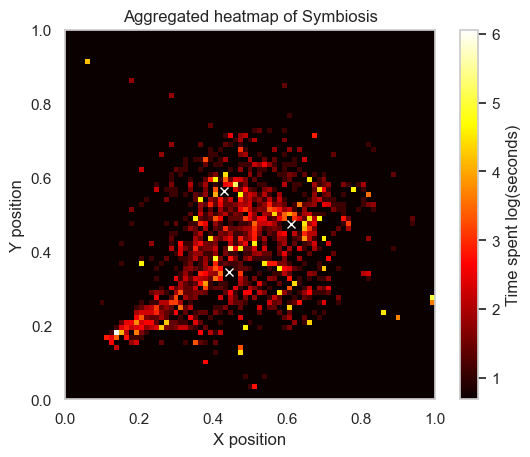

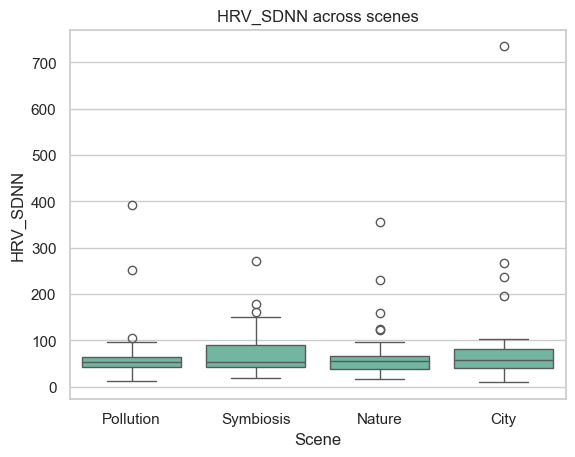

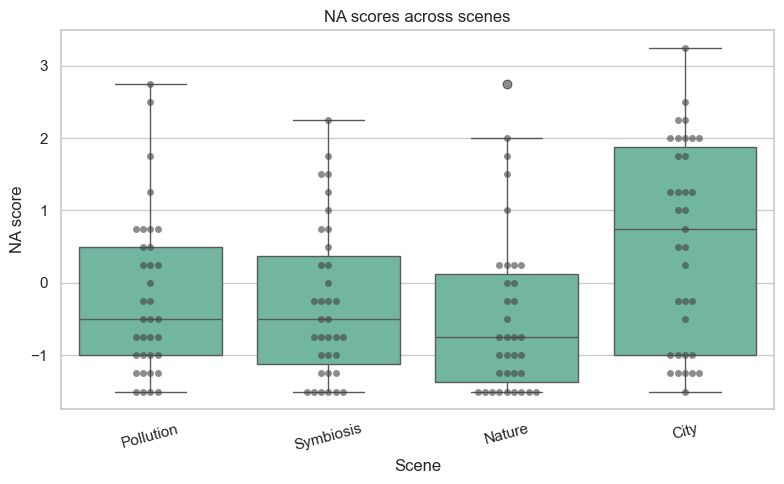

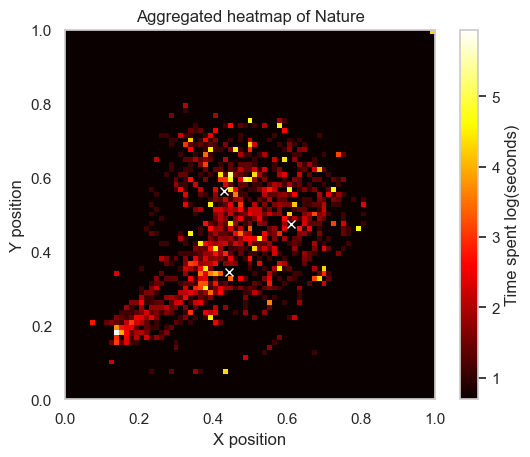

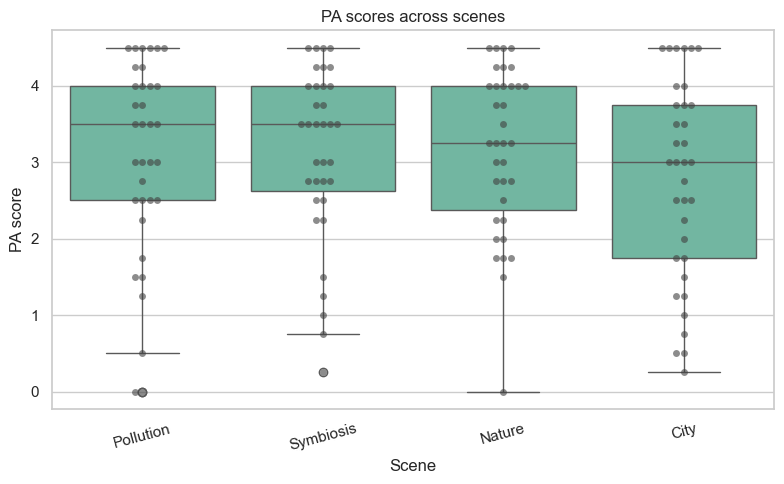

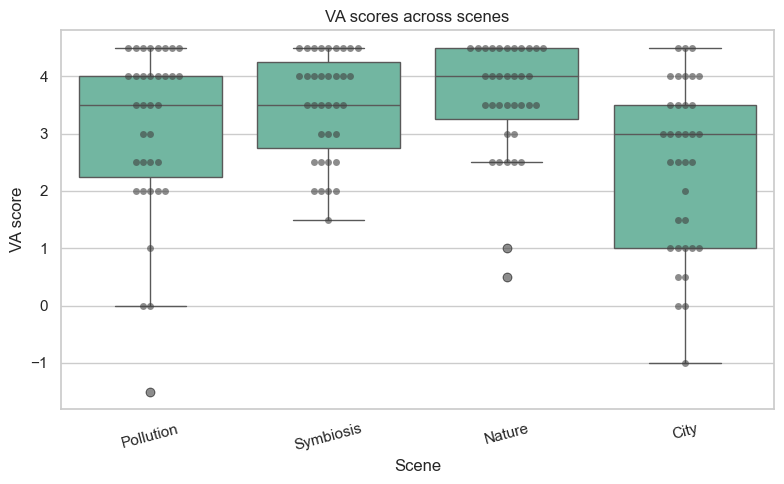

An experiment is currently being conducted at the VR Lab of the MPIEA; 37 participants have been tested so far (20 female, 17 male), aged between 19 and 57, most of them of German origin. Each session lasted approximately one hour. Upon arrival, participants were briefed about the experiment, gave informed consent, and completed a demographic questionnaire along with a questionnaire assessing immersive tendencies and prior experience with VR technology. They were then equipped with a heart rate monitoring belt and special shoes for use with the VR treadmill. A baseline heart rate measurement and an initial emotional assessment were recorded, followed by a short familiarization phase with the VR equipment (i.e., the head-mounted display and treadmill). A second baseline of heart rate and emotional state was then obtained while participants experienced the VR environment without any sound. Subsequently, participants experienced four different VR soundscapes in randomized order. They were instructed to explore the virtual environment freely, attend to the sounds, which were rendered through a 3D speaker array, and identify their preferred listening location. Within the virtual space, three human-like figures in the center of the room acted as sound sources. They produced four soundscapes: Symbiosis and Pollution, based on electric vehicle sounds with and without the algorithm manipulating consonance and dissonance, as well as City and Nature. After selecting a preferred location within each scene and informing the experimenter, participants completed an emotional assessment questionnaire. At the end of the experiment, they were invited to give open feedback about their experience.
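Randomized presentation order can be generated reproducibly per participant, so that the order each person heard can be recovered at analysis time. The sketch below draws a random permutation of the four soundscapes from a generator seeded with a participant ID; the function name and seed string are hypothetical, and the experiment may have randomized order differently (for example with a counterbalanced Latin square).

```python
import random

SOUNDSCAPES = ("Symbiosis", "Pollution", "City", "Nature")


def presentation_order(participant_id, seed="bns"):
    """Return a reproducible random order of the four soundscapes for one participant."""
    # Seeding with a string derived from the participant ID makes each order repeatable.
    rng = random.Random(f"{seed}:{participant_id}")
    return rng.sample(SOUNDSCAPES, k=len(SOUNDSCAPES))
```

Calling `presentation_order(12)` twice returns the same list, while different participant IDs generally yield different orders.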

The dataset comprises subjective emotion ratings, heart rate data, and movement trajectories. No significant differences in heart rate were observed across soundscapes, likely because the strenuous treadmill activity overshadowed subtle emotion-related effects. Subjective ratings, however, revealed clear distinctions: City differed significantly from all other soundscapes in both positive and negative affect, and Pollution also elicited more negative affect than Nature. For valence, City again differed from all others, while Pollution was rated as more unpleasant than both Symbiosis and Nature. Analysis of final positions showed that participants placed themselves significantly farther from the sound sources only in the City condition.
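The final-position measure can be derived from the movement trajectories. The sketch below assumes each trajectory is a list of (x, y) floor coordinates in metres, takes its last point, measures the distance to the nearest sound-source position, and averages per condition. The coordinate format, function names, and use of the nearest source (rather than, say, the centroid of the three figures) are assumptions, and the significance testing reported above is not shown.

```python
import math
from collections import defaultdict


def final_distance(trajectory, sources):
    """Distance (m) from the last tracked floor position to the nearest sound source."""
    if not trajectory:
        raise ValueError("trajectory is empty")
    final = trajectory[-1]
    return min(math.dist(final, src) for src in sources)


def mean_final_distance(runs, sources):
    """Average final distance per condition; runs is a list of (condition, trajectory)."""
    per_condition = defaultdict(list)
    for condition, trajectory in runs:
        per_condition[condition].append(final_distance(trajectory, sources))
    return {c: sum(d) / len(d) for c, d in per_condition.items()}
```

Per-participant values from `final_distance` would then feed whatever statistical comparison is used across conditions.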

Value of the Approach

The project offers a unique contribution to the “New Silence in Urban Spaces” Pilot Use Case by combining artistic experimentation with technological and physiological research. Through the development of a biomimetic sound algorithm and a responsive audio-visual installation, the work reframes urban noise as a curatable, interactive layer—rather than a pollutant. It introduces an emotionally intelligent sonic system that reflects human presence and movement, contributing to mental well-being, public space innovation, and aesthetic reflection on the role of sound in future cities. The work bridges art, mobility, and health within the context of hybrid urban infrastructures.

Adaptability / interoperability:

The methodology is designed to be modular and extensible. The core algorithm can be adapted for use in AVAS, interactive architecture, or smart infrastructure, allowing others to build upon it in mobility design, urban planning, or responsive public art. The use of industry-standard platforms (Max/MSP, Unreal Engine, CAD) ensures interoperability across disciplines. The VR environment and physiological testing setup provide a replicable framework for evaluating human responses to sound design. In this way, the project offers not just a finished piece but a toolset and thinking model for cities, designers, and technologists alike.

Impact

Through cross-disciplinary collaboration and real-time physiological testing, the project translates a visionary concept into a scientifically testable, interactive installation. The combination of immersive VR, algorithmic sound control, and biometric feedback demonstrates the health-promoting potential of sonic design in urban environments. Beyond the artistic context, the biomimetic sound algorithm is designed for real-world scalability. One immediate application is in AVAS (Acoustic Vehicle Alerting Systems) for electric vehicles, offering a nature-inspired alternative to current artificial engine sounds. The algorithm could dynamically adapt to context, speed, and proximity, reducing stress while maintaining safety and regulatory standards.
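As a rough illustration of adapting an AVAS signal to speed and proximity, the sketch below returns an output gain between a safety floor and full level. Every parameter is a placeholder: `cutoff_kmh` stands in for the speed above which a given regulation (for example UN Regulation No. 138) no longer requires the alerting sound, `min_gain` stands in for a mandated minimum level, and the linear proximity ramp is an assumption rather than part of the project's algorithm.

```python
def avas_gain(speed_kmh, pedestrian_distance_m, cutoff_kmh=20.0,
              min_gain=0.3, near_m=2.0, far_m=20.0):
    """Hypothetical AVAS output gain in [0, 1] from vehicle speed and pedestrian distance."""
    if speed_kmh < 0:
        raise ValueError("speed must be non-negative")
    if speed_kmh > cutoff_kmh:
        # Above the placeholder cutoff, no alerting sound is emitted in this sketch.
        return 0.0
    # Clamp the distance to [near_m, far_m]; proximity is 1.0 at near_m and 0.0 at far_m.
    d = min(max(pedestrian_distance_m, near_m), far_m)
    proximity = (far_m - d) / (far_m - near_m)
    # Never drop below min_gain while active, so the vehicle stays audible.
    return min_gain + (1.0 - min_gain) * proximity
```

Keeping a fixed floor separates the safety requirement (always audible when active) from the comfort-oriented adaptation layered on top of it.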

Looking further ahead, the system has the potential to evolve into a modular design framework for curating the sound of entire smart cities. In this role, it could help orchestrate urban soundscapes across public infrastructure, mobility systems, and architecture—turning cities into emotionally responsive environments that balance functionality with well-being. By transforming noise into an adaptive, participatory medium, the project lays the groundwork for a future in which urban sound is no longer a byproduct, but a designed, intentional layer of experience—from vehicles to streetscapes to entire urban ecosystems.