+ WHO?

University of Genoa (UniGe)

Partner

+ WHAT?

Understanding and measuring how humans express emotion and intention through movement.

+ HOW?

By translating expressive gesture analysis into a modular Python toolkit that connects scientific precision with creative exploration.

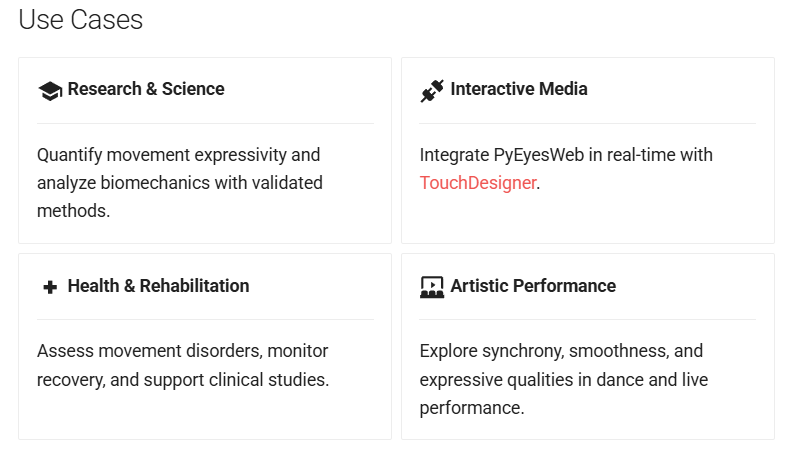

PyEyesWeb is a research toolkit for extracting quantitative features from human movement data. It builds on the Expressive Gesture Analysis library of EyesWeb, and bringing expressive movement analysis into Python is a core aim of the project. The library provides computational methods to analyze different qualities of movement, supporting applications in research, health, and the arts. It is designed for easy adoption in artificial intelligence and machine learning pipelines and for integration with creative and interactive platforms such as TouchDesigner, Unity, and Max/MSP.

Developed by InfoMus Lab – Casa Paganini, University of Genoa, with support from the EU ICT STARTS ReSilence Project, the project responds to the challenge of understanding how humans express emotion and intention through movement. By offering accessible computational tools, PyEyesWeb allows researchers, artists, and developers to explore and measure movement qualities such as smoothness, synchrony, and dynamics. Engaging with the urban dimension, the toolkit helps interpret how bodies move and interact in public and collective spaces, whether in dance, rehabilitation, or responsive installations. PyEyesWeb invites users to rethink the relationship between motion, emotion, and environment within today’s digital cities.

Collaborations

Created by InfoMus Lab, in collaboration with partners of the ReSilence consortium and external artists and technologists who contributed to testing and feedback.

expressive movement, gesture analysis, embodied interaction, computational creativity, motion data, urban performance, AI & arts, multimodal analysis

Design Methods & Frameworks

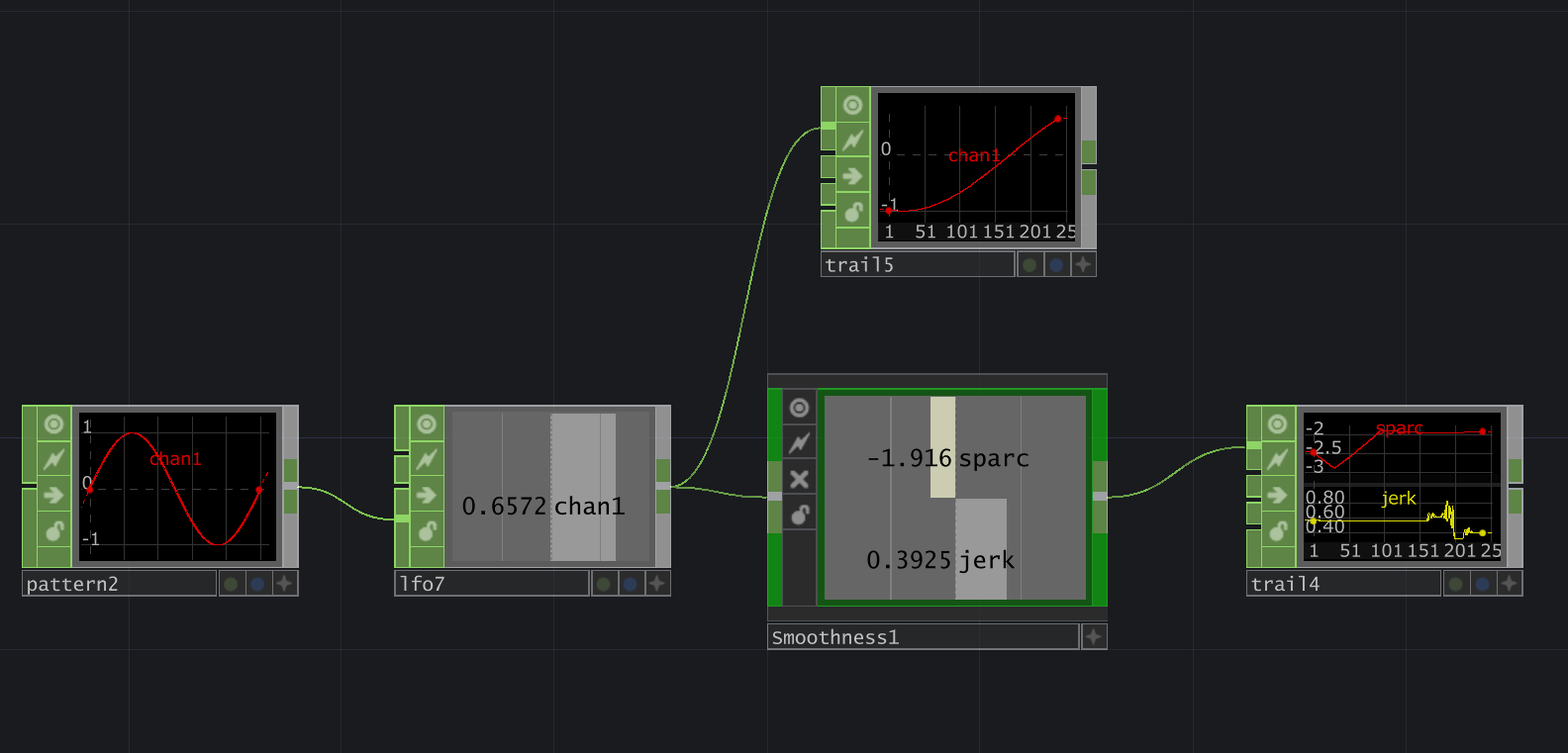

PyEyesWeb builds on InfoMus Lab’s multi-layered conceptual framework for expressive movement, which connects raw physical signals captured from the human body to higher-level expressive qualities such as emotion, style, and intention. Developed as a modular Python library, it provides computational methods to analyze the expressive dimensions of human movement. The toolkit integrates smoothly with artificial intelligence and machine learning workflows and connects directly to creative platforms such as TouchDesigner, Unity, and Max/MSP, enabling both real-time experimentation and offline data analysis.

It works with motion capture and sensor data in standard file formats (CSV, JSON, TSV), allowing researchers, artists, and technologists to perform quantitative analysis of expressivity and performance dynamics across diverse contexts, from scientific studies to artistic performance and rehabilitation. This methodological foundation, informed by the Expressive Gesture Analysis library of EyesWeb, bridges scientific precision and creative exploration, translating decades of research on movement and emotion into a practical, accessible tool.
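As a minimal sketch of what working with these formats can look like, the code below reads CSV, TSV, or JSON motion data into one list of values per signal, using only Python's standard library. The layout is an assumption for illustration: a header row (or JSON keys) naming each signal, one row or object per frame, and the hypothetical column names `t` and `wrist_x`. The functions `load_delimited` and `load_json` are illustrative helpers, not part of PyEyesWeb's API.

```python
import csv
import io
import json
from typing import Dict, List, TextIO

# Hypothetical loaders for illustration only; not PyEyesWeb's actual file schema or API.


def load_delimited(stream: TextIO, delimiter: str = ",") -> Dict[str, List[float]]:
    """Read comma- or tab-separated text that starts with a header row.

    Returns one list of floats per column, with one value per frame.
    """
    reader = csv.DictReader(stream, delimiter=delimiter)
    columns: Dict[str, List[float]] = {name: [] for name in reader.fieldnames or []}
    for row in reader:
        for name in columns:
            columns[name].append(float(row[name]))
    return columns


def load_json(stream: TextIO) -> Dict[str, List[float]]:
    """Read a JSON array of per-frame objects into the same column layout.

    Assumes every frame object has the same keys.
    """
    columns: Dict[str, List[float]] = {}
    for frame in json.load(stream):
        for name, value in frame.items():
            columns.setdefault(name, []).append(float(value))
    return columns


# Example data: the same two frames of a hypothetical wrist signal in each format.
CSV_TEXT = "t,wrist_x\n0.00,0.10\n0.01,0.12\n"
TSV_TEXT = CSV_TEXT.replace(",", "\t")
JSON_TEXT = '[{"t": 0.0, "wrist_x": 0.1}, {"t": 0.01, "wrist_x": 0.12}]'

if __name__ == "__main__":
    print(load_delimited(io.StringIO(CSV_TEXT)))
    # prints {'t': [0.0, 0.01], 'wrist_x': [0.1, 0.12]}
```

Normalizing all three formats into the same column layout means any downstream feature computation can stay independent of how the data was recorded.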

Three screenshots of a demo integrating two PyEyesWeb library modules in TouchDesigner: a real-time measure of synchronization between two time series, and a measure of the smoothness of a body joint. The smoothness module provides two measures, computed by two different algorithms: one starting from acceleration and one starting from velocity.
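The two kinds of measure shown in the demo can be illustrated with generic, swapped-in techniques. The sketch below is not PyEyesWeb's implementation, and its function names are hypothetical. Smoothness is estimated with the log dimensionless jerk (LDLJ), computed once from a velocity signal (jerk as its second derivative) and once from an acceleration signal (jerk as its first derivative). Synchronization is estimated as the Pearson correlation between two time series at the lag, within a chosen window, where they agree best. Signals are assumed to be one-dimensional, uniformly sampled with step `dt`, and of equal length for the synchronization measure.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical sketch: generic LDLJ smoothness and lagged correlation,
# not the algorithms or API of the PyEyesWeb modules.


def derivative(signal: Sequence[float], dt: float) -> List[float]:
    """Forward finite differences; the result is one sample shorter than the input."""
    return [(b - a) / dt for a, b in zip(signal, signal[1:])]


def integral_of_square(signal: Sequence[float], dt: float) -> float:
    """Rectangle-rule approximation of the integral of signal(t) ** 2 over time."""
    return sum(x * x for x in signal) * dt


def ldlj_from_velocity(velocity: Sequence[float], dt: float) -> float:
    """Log dimensionless jerk from a 1D velocity signal (higher means smoother).

    Jerk is the second derivative of velocity; scaling by duration**3 / peak**2
    removes the dependence on movement amplitude and duration.
    The signal must not be constant, otherwise the jerk integral is zero.
    """
    duration = (len(velocity) - 1) * dt
    jerk = derivative(derivative(velocity, dt), dt)
    peak = max(abs(v) for v in velocity)
    return -math.log(duration ** 3 / peak ** 2 * integral_of_square(jerk, dt))


def ldlj_from_acceleration(acceleration: Sequence[float], dt: float) -> float:
    """Log dimensionless jerk from a 1D acceleration signal (higher means smoother).

    Jerk is the first derivative of acceleration, so the scaling factor
    becomes duration / peak_acceleration**2.
    """
    duration = (len(acceleration) - 1) * dt
    jerk = derivative(acceleration, dt)
    peak = max(abs(a) for a in acceleration)
    return -math.log(duration / peak ** 2 * integral_of_square(jerk, dt))


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def best_lag_correlation(
    x: Sequence[float], y: Sequence[float], max_lag: int
) -> Tuple[int, float]:
    """Return (lag, r): the lag in samples at which y best matches x.

    A positive lag means y follows x (y[i + lag] is paired with x[i]).
    """
    best_lag, best_r = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = x[: len(x) - lag], y[lag:]
        else:
            a, b = x[-lag:], y[: len(y) + lag]
        r = pearson(a, b)
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r
```

For example, adding a small high-frequency oscillation to a bell-shaped velocity profile lowers both LDLJ values, and a sine wave delayed by three samples is recovered as a lag of 3 with a correlation close to 1.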

Scenarios / Tests / Exhibitions

PyEyesWeb has been used in interactive performances, research studies, and rehabilitation contexts to analyze and visualize human motion. In artistic settings, it provided real-time feedback to performers by measuring the smoothness and synchrony of their movements. In healthcare, it helped assess motor control and recovery through expressive metrics. The toolkit’s modular design made it adaptable to diverse environments, from lab experiments to stage performances.

Lessons learned:

Through these applications, PyEyesWeb showed how computational tools can enhance the perception and understanding of expressivity. Its flexibility across fields revealed strong potential for both scientific analysis and creative exploration, demonstrating that data-driven insights can coexist with artistic intuition.

PyEyesWeb provides a bridge between human expression and computation, enabling artists, scientists, and clinicians to explore movement as both a creative and analytical language. Within ReSilence, it contributed to understanding how sound and motion intertwine in the experience of urban spaces and live performance.

Adaptability / interoperability:

As an open-source, modular library, PyEyesWeb can be expanded, customized, and integrated with new systems and datasets. Its accessibility encourages collaboration and reuse, inviting others to build upon its tools and apply them across different contexts, from smart city research to interactive art installations.

Impact

PyEyesWeb fosters cross-disciplinary exchange between technology and creativity, supporting new ways to study, visualize, and design expressive movement across art, science, and society.