+ WHAT?

An art-science installation that listens to algal blooms and the shifting relationships they signal between human activity and aquatic ecosystems, asking: “What are we not hearing?”

+ HOW?

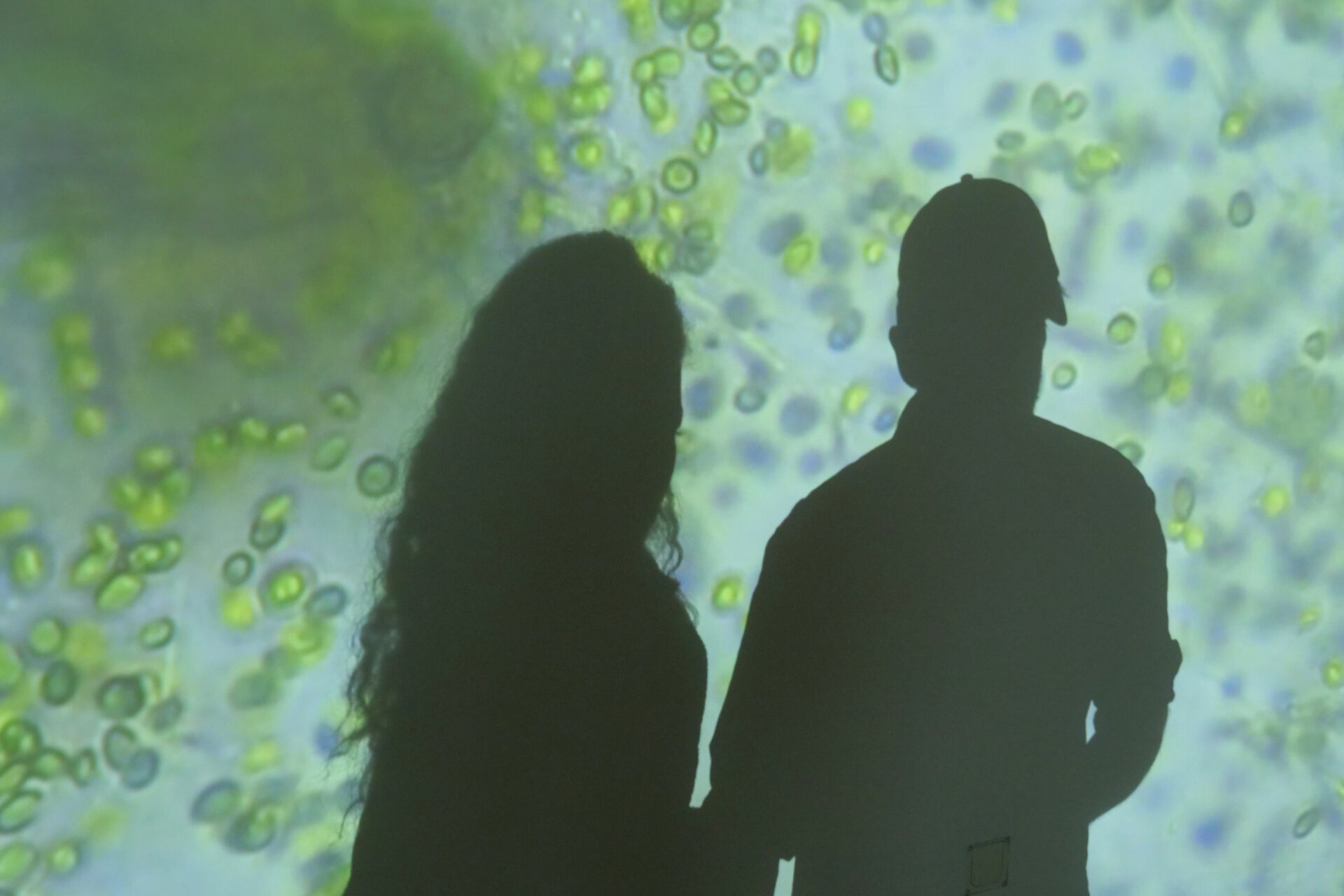

A multi-sensory VR experience with an orchestral soundscape brings the microscopic world of phytoplankton to life, making the invisible audible.

BLOOMS is an art-science installation that listens attentively to algal blooms and the shifting relationships they signal between human activity and aquatic ecosystems, asking: What are we not hearing? Rising temperatures, marine heat waves, and pollution from agriculture, cities, and industry contribute to eutrophication, a process in which excess nutrients “feed” the water, creating conditions for blooms to thrive and disrupting aquatic metabolic cycles.

The installation interprets scientific data from satellite imagery and microscopy to illuminate the cosmopolitan algae species found in Southeast Asia, the Mediterranean, the Baltic Sea, and Brandenburg. Working with field samples from local water bodies, the artists and collaborating scientists also ran citizen-science workshops that invited the public into conversations about the impact of anthropogenic activities on aquatic ecosystems. At its core, a multi-sensory VR experience, accompanied by a carefully designed orchestral soundscape, haptic feedback, and immersive visuals, animates a microcosmic view into the life of phytoplankton, making the invisible audible and rendering a complex ecological phenomenon as a tangible, emotional encounter.

BLOOMS is co-created by Wendy Chua, Joyce Koh, and Gustavo Maggio, in collaboration with PlayersJourney. The project was commissioned by the EU S+T+ARTS Artist Residency ReSilence.

Collaborations

The project was a transdisciplinary effort involving key collaborators:

– PlayersJourney UG (Dr. Christian Stein, Valentin Hanau): The SME partner provided the technical expertise for the project, leading the research, design, and development of the VR experience, sensor technology integration, and the production of the final installation.

– Andre Gatto: Media arts and video production

– Tropical Marine Science Institute (Team HABs & Dr. Sandric Leong): These scientific partners provided the critical datasets, microscopy imagery, and expert knowledge on harmful algal blooms that formed the scientific backbone of the artwork.

– Object Space Agency: Collaborated on the co-creation of the final installation.

– Tieranatomisches Theater (TAT) Museum: Museum partner for the inaugural BLOOMS exhibition in Berlin.

Keywords: immersive experience, art-science collaboration, data sonification, ecological acoustics, VR experience, haptic feedback, climate change, embodied experience

Device-Making Guides

Hardware setup for the VR station:

– 2 x VR Headset (Meta Quest 3)

– 2 x Haptic vest (bHaptics TactSuit X40)

– 2 x Gaming PC (UltraGaming R7-7800X3D 32GB; RTX4070TIS 2TB-M2 WiFi W1)

– 2 x Active USB-A extension (deleyCon 7.5m, min. USB 3.0)

– 2 x USB-C to USB-A cable (min. USB 3.0)

– 2 x Infrared Heat Lamp (Philips IR 250R R125 E27 250 Watt)

– 2 x Light bulb socket

– Other equipment: extension cord, power strip, cable ties

Curated Datasets

The project utilized authentic scientific datasets to inform the artistic creation. This included:

– Marine Water Column Data: Sonde measurements of temperature, salinity, chlorophyll, dissolved oxygen, and other parameters from a site in the Johor Strait were used to compose the soundscape, translating fluctuations in the environmental data into musical expression.

– Satellite Imagery: Data from the European Space Agency’s Copernicus Sentinel satellite was used to visualise the vast scale of algal blooms from a planetary perspective.

– Microscopy Data: Microscopic imagery of cosmopolitan algae species found in various global and local water bodies, including Southeast Asia, the Baltic Sea, and Berlin’s Brandenburg region, formed the basis for the VR visuals and mixed-media installations.
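
One way to picture how sonde readings can become musical material is the minimal sketch below. It is not the composers' actual method: the pentatonic scale, the note range, the function names, and the sample chlorophyll values are all illustrative assumptions. It rescales a series of readings and quantises each one onto a MIDI pitch, so rising chlorophyll is heard as rising pitch.

```python
# Hypothetical sketch: map a series of sonde readings onto MIDI pitches.
# Scale, note range, and sample values are illustrative assumptions,
# not taken from the BLOOMS dataset or score.

C_MINOR_PENTATONIC = [0, 3, 5, 7, 10]  # semitone offsets within one octave


def normalise(values):
    """Rescale readings to the range 0..1; a flat series maps to 0.5."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def to_midi_notes(values, low_note=48, octaves=2, scale=C_MINOR_PENTATONIC):
    """Quantise normalised readings onto scale degrees above low_note."""
    steps = len(scale) * octaves  # number of available pitches
    notes = []
    for x in normalise(values):
        idx = min(int(x * steps), steps - 1)  # clamp the highest reading
        octave, degree = divmod(idx, len(scale))
        notes.append(low_note + 12 * octave + scale[degree])
    return notes


# Made-up chlorophyll readings (micrograms per litre), for illustration only.
chlorophyll = [2.1, 2.4, 3.9, 8.5, 14.2, 9.7, 4.0]
notes = to_midi_notes(chlorophyll)
print(notes)
```

Quantising to a scale rather than to raw semitones keeps the result consonant, which is a common choice in sonification; other parameters such as temperature or dissolved oxygen could drive dynamics or timbre in the same way.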

Design Methods & Frameworks

Our core methodology was a deeply integrated art-science collaboration. The creative framework involved:

– Data Sonification & Visualisation: Translating raw scientific data into an orchestral composition and immersive VR world. For example, varied string techniques like col legno battuto were used to evoke the geometric shapes of diatoms, while densely layered orchestration represented their exponential proliferation.

– Embodied Experience Design: A process focused on moving beyond passive observation to active, full-bodied participation. By orchestrating VR visuals, spatialized sound, and haptic vest vibrations, we created an embodied awareness of an otherwise invisible ecological process.

– Generative AI: In one iteration, microscopy imagery of microplastics and microalgae was transformed with Generative AI tools into a short film narrative. The speculative imagery tells the tale of toxic biofilms thriving on ocean microplastics, which act as vectors for the algal species to thrive and travel to new water bodies, visualizing a potential future threat.

Immersive Experiments

The BLOOMS VR experiment was conceived as a deep sensory immersion into the hidden life of phytoplankton blooms. Visitors begin at the ocean surface before being gently “shrunk” to the scale of phytoplankton and zooplankton to witness the bloom of algal species that disrupts aquatic ecologies. The immersion is powered by layered sound design and embodied sensation:

– Surround loudspeakers & subwoofers broadcast low-frequency movements and currents, enveloping the exhibition hall with the physical presence of the ocean.

– VR headset audio carries the intimate “voices” of individual plankton species, each instrument or timbre representing a lifeform within the bloom.

– Haptic vests translate currents and pulses into tactile vibrations on the body, making water movement physically tangible.

– For the opening, live musicians added a performative dimension, interacting with the spatialized soundscape to amplify the sense of encountering living agents.
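
The translation of currents into tactile vibration described above can be sketched roughly as follows. This is an assumption-laden illustration, not the installation's code: the 5 x 4 motor grid, the 0 to 100 intensity range, the `MAX_SPEED` threshold, and the travelling-wave shape are hypothetical, and no real vest SDK is called.

```python
# Hypothetical sketch: turn a water-current reading into per-motor
# vibration intensities. Grid size, intensity range, and wave shape
# are assumptions; no real haptic vest SDK is used here.
import math

ROWS, COLS = 5, 4   # assumed motor layout on one side of the vest
MAX_SPEED = 1.5     # assumed current speed (m/s) treated as full intensity


def current_to_frame(speed, phase):
    """Return a ROWS x COLS grid of intensities (0-100) for one time step.

    speed scales the overall strength; phase (radians) shifts a wave
    across the rows so that the pulse appears to travel along the body.
    """
    strength = max(0.0, min(speed / MAX_SPEED, 1.0))
    frame = []
    for row in range(ROWS):
        # Raised cosine in 0..1, offset per row to form a travelling wave.
        wave = 0.5 * (1.0 + math.cos(phase - row * math.pi / ROWS))
        frame.append([round(100 * strength * wave)] * COLS)
    return frame


frame = current_to_frame(speed=0.75, phase=0.0)
for row in frame:
    print(row)
```

Calling `current_to_frame` repeatedly with an increasing `phase` moves the peak of the vibration from one row to the next, which is one simple way to suggest water flowing past the wearer.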

This orchestration of sound, touch, and vision transformed the scientific phenomenon of eutrophication into a visceral, emotional experience. Instead of reading data, participants felt the ocean’s metabolic processes, gaining an embodied interest in, and understanding of, the precarious balance between nutrient flows, warming seas, and algal proliferation.

Reflections

– The polyphonic soundscape—species “voices” converging into a bloom—was crucial for evoking empathy with non-human lifeforms.

– Emotional responses (wonder, unease, curiosity) were often stronger triggers for understanding the scientific narrative than verbal explanations alone.

– The combination of VR, sound layers, and haptics generated a memorable embodied awareness of how human activity drives invisible ecological tipping points.

Scenarios / Tests / Exhibitions:

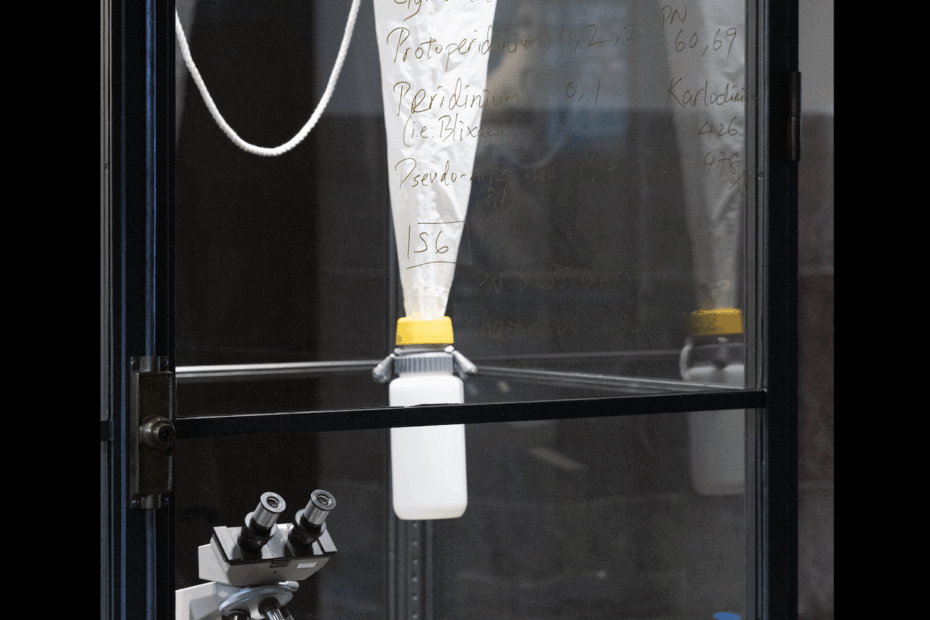

The primary application was the BLOOMS art-science installation, exhibited at the Tieranatomisches Theater (TAT) Museum in Berlin from May 7 to June 30, 2025. The exhibition was designed as a journey through the phenomenon of algal blooms. Visitors first encountered vitrines displaying the tools of scientists (plankton nets, multi-parameter sondes) and contextual information. The centerpiece was the immersive VR experience situated in the museum’s rotunda, where participants donned VR headsets and haptic vests to “dive” into the plankton microcosm. The experience was augmented by large-scale projections and a multi-channel sound system that filled the space.

A key component was a Citizen Science Workshop where participants explored field samples from Berlin waterways, using microscopy to identify local phytoplankton and learn about cosmopolitan toxic species. The artists, along with collaborating scientists from Leibniz-Institut für Gewässerökologie und Binnenfischerei (IGB) Berlin and the Tropical Marine Science Institute of Singapore, facilitated a discussion with participants on current algal bloom monitoring and mitigation strategies, and on how the public can take part in citizen-science surveys of urban lakes and waterways.

Lessons learned:

The exhibition served as a public testbed for the effectiveness of multi-sensory art in communicating complex science. An art perception survey was conducted to evaluate how the immersive environment influenced the participants’ embodied experience and their ability to connect with a non-human perspective. While the full data is still under analysis, initial observations indicate that the layering of haptics, orchestral sound, and VR visuals was highly effective in triggering strong emotional responses and fostering a memorable understanding of the scientific narrative behind algal blooms.

All photos and videos can be found in this drive: EXH PHOTOS & VIDEOS

Artistic, Social, Technological, and Research-based contributions:

– Research: It serves as a compelling case study in art-science collaboration, showing how artistic methodologies can provide new, empathetic pathways to understanding complex scientific phenomena like eutrophication and climate change.

– Artistic: The project contributes a new model for data-driven art, transforming scientific datasets into a rich, orchestral, and haptic composition that tells an ecological story.

– Social: BLOOMS raises public awareness of a critical but often invisible environmental issue that links land-based urban activity to the sea. The citizen science workshops empowered the public with tools for ecological observation, fostering community engagement and environmental stewardship.

– Technological: It demonstrates a sophisticated integration of VR, haptic feedback, and spatial audio to create a scientifically grounded, embodied experience, pushing the boundaries of immersive storytelling for science communication.

Adaptability / interoperability:

The project’s framework is highly adaptable. The methodology of translating local environmental data into an immersive art experience could be applied to different ecological challenges (e.g., air quality, soil health) and geographic locations. The modular technical setup (VR hardware, haptic vests, sound design) can be scaled to fit various venues, from museums and galleries to science centres and educational institutions. Furthermore, the citizen science workshop component is a replicable model for public engagement that can be integrated with similar exhibitions worldwide.

Impact

The combination of these tools and collaborations successfully addressed the project’s core challenge. By translating abstract scientific data into a multi-sensory, immersive experience, the project made the invisible ecological crisis of eutrophication tangible and emotionally resonant. The VR experiment allowed participants to gain an “embodied awareness” of a more-than-human perspective, fostering a deeper understanding and empathy that data sheets alone cannot provide. The collaborative methodology serves as a powerful model for how art and science can converge to communicate complex environmental issues to the public.