+ WHAT?

Designing awareness environments that respond to neurodivergent sensory experiences and foster emotional well-being.

+ HOW?

Creating immersive, sensor-responsive installations that translate embodied experiences into inclusive spatial design insights.

“Echoes” is an immersive environment designed to raise awareness and foster acceptance of neurodivergent spatial experiences, creating meaningful connections between art, technology, and human perception. For autistic individuals, sensory input profoundly shapes how they interact with the world, making physical environments critical to their well-being. Spatial access remains consequential to agency, empowerment, and self-possession. “Echoes” invites participants to engage their own bodies and senses to empathize with the emotional experiences of others. It is a safe space with responsive light and sound events that support self-regulation; a site of bodily and perceptual affirmation and awareness. The work highlights the capacity of built environments to influence embodied, multi-sensory experiences, their potential for self-regulation, and their impact—particularly on neurodivergent and autistic individuals, but ultimately on everyone. The “Echoes” installation aims to improve experience quality for ND/ASD individuals with a high level of sensory engagement.

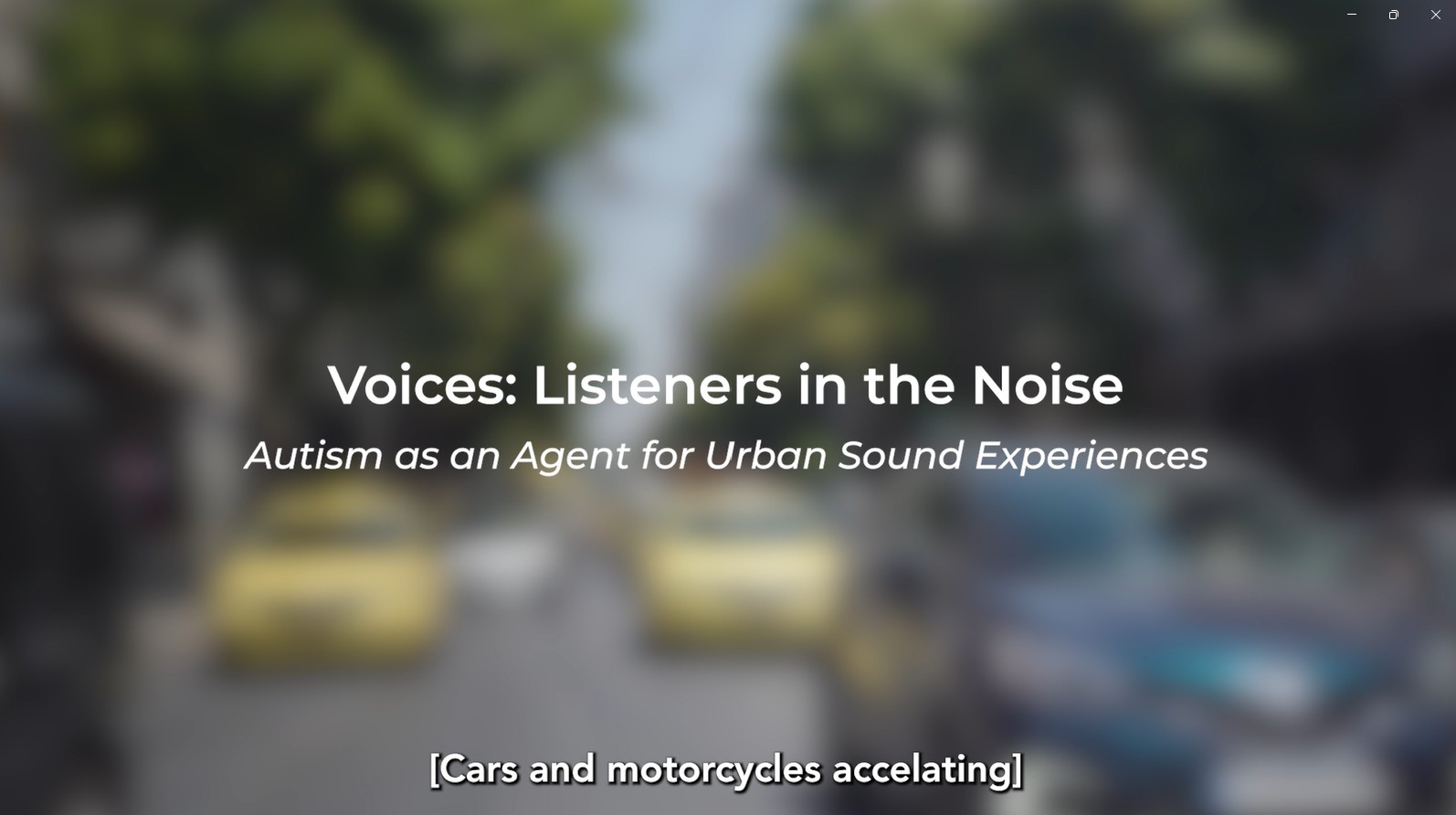

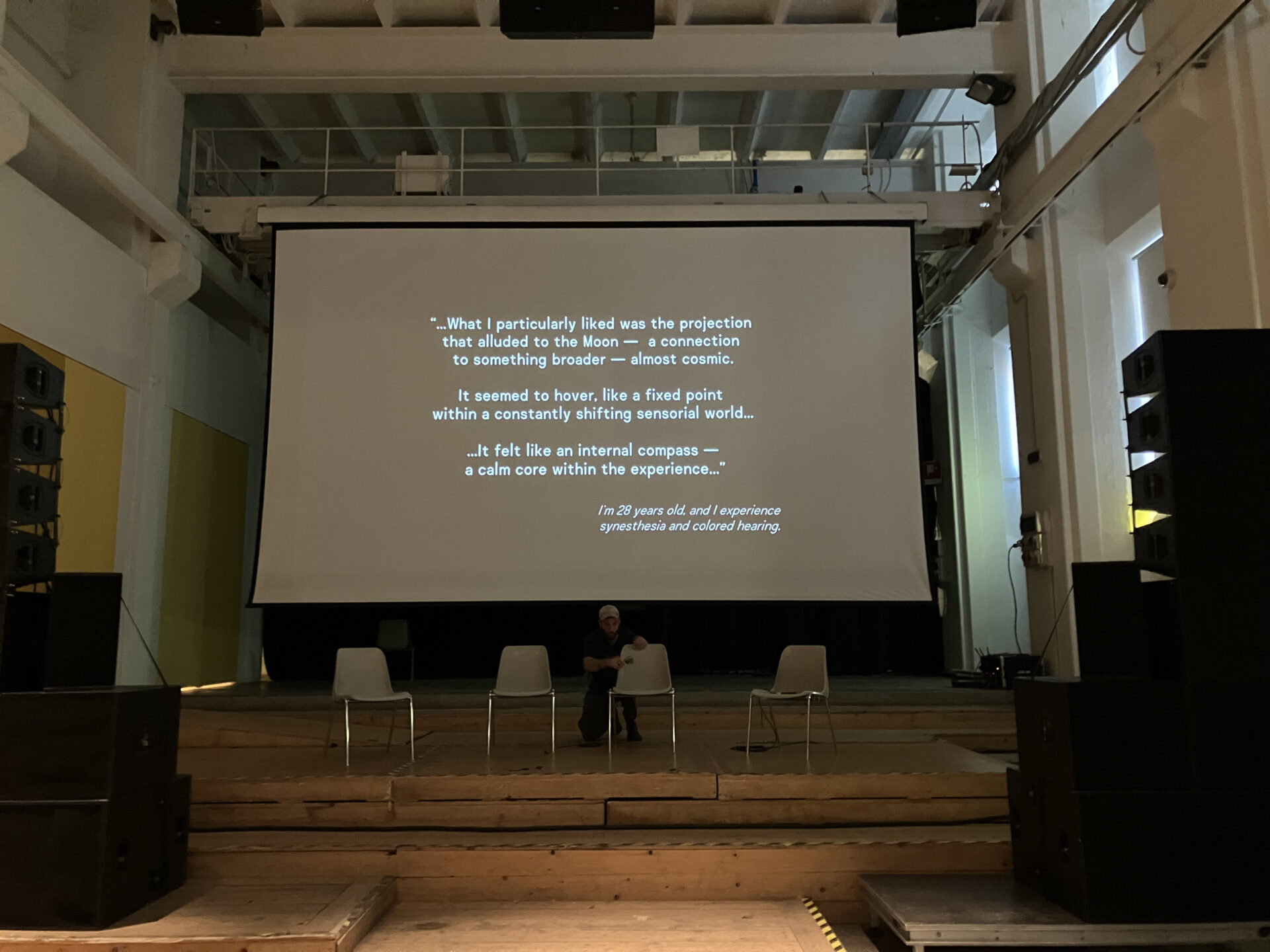

Complementing the physical experience of the installation, the “Voices” film documentary shares first-hand narratives that reveal how urban soundscapes affect autistic individuals, raising awareness and inspiring more inclusive cities. The interview transcripts feed a research project that advances knowledge on how ASD/ND individuals experience urban environments and how collaborations among neurodivergent and neurotypical individuals and communities, and art, design, technology, and healthcare professionals, can create autism-friendly spaces. The information compiled from the voluntary feedback of “Echoes” exhibition visitors and from the coding of the interview transcripts aims to offer insights for designers, planners, and policymakers, and to contribute actionable research on this critical topic.

Collaborations

Technology Collaborators:

– Eleftheria Lagiokapa & Paraskevi Kritopoulou, CERTH

– Ilias Kalisperakis & Spyros Stefanidis, Up2Metric

Research Collaborators for “Voices”:

– Rosemary (Rosie) Frasso, Victor Heiser, MD Professor Program Director, Public Health; Director, Mixed Methods Research, Asano-Gonella Center for Medical Education & Health Care, Sidney Kimmel Medical College

– Quinn Plunkett, MPH, Global Health Strategy & Research, Driving Policy & Impact Across UN, Academic & NGO Sectors

Community Collaborators, ASD Self-Advocates

– Rachel Updegrove, Adjunct Faculty, Thomas Jefferson University; Laboratory Planner, HERA Laboratory Planners, Autistic Self-Advocate.

– Wendy Ross, Inaugural Director, Jefferson Center for Autism & Neurodiversity

– Triantafylenia Kosmidou

Autistic Individuals who participated in interviews that informed the research of the work and shaped the “Voices” Documentary.

– Jeron Cole, Binech Hernandez-Lorenzo, Stuart Neilson, Shardai Robinson, Chloe Rothschild, Emma Russek, Fox Ryker, Misha Samorodin, Ev Smith, Rachel Updegrove, Nae Vallejo, Max Van Kooy, Adam Wolfond

Videos Montage:

– Elpida Nikou

embodied experience, sonic awareness in urban soundscapes, community engagement, responsive environments, neurodiversity, sonic architecture, AI-generated sound, real-time space adaptation, biometric recordings for space sonification

Interactive Modules & Web Tools

A suite of interactive digital components was developed to enable real-time tracking, visualization, audio generation, and user interaction within the physical installation environment. The system integrates web-based interfaces, computer vision, mmWave radar listeners, and physical devices into a cohesive, multimodal interactive experience.

Physical Space Architecture and Equipment

“Echoes” is a physical space with a curvilinear base of approximately 4.5 meters and a height of 6 meters, constructed with eco-friendly materials. It is a parametrically designed structure made of interlocking 15 mm wood pieces, precision-cut by CNC and coded for easy assembly. These interlocked pieces form 40 frames of various shapes and dimensions that are mounted next to and on top of each other. The structure is designed for rapid and efficient assembly and disassembly, and with transportability in mind for deployment in various contexts. The frames are clad with two 8 mm-thick layers of cork that mold seamlessly to the complex curvatures of the frames. The cork layers also provide acoustical protection and contribute to the desired sound reverberation. The inner surfaces are dressed with cork fabric for tactile considerations and visual continuity. The top part of the installation creates a protective cap that also serves as the carrier of all equipment, hidden from the visitor’s eye. The bottom surface of this cap is white to reflect the dynamic lights that are projected by a short-throw projector mounted on it. The projector casts dynamic light events onto a milky, half-spherical plexiglass surface, 1.60 m in diameter, that is perceived from the interior as a dome. This surface is positioned 1.20 m below the cap and approximately 4.50 m above the ground.

Autistic individuals frequently seek smaller, sensory-friendly spaces to help them self-regulate. The installation’s curvilinear, nature-inspired geometry creates a smooth transition entry zone from a larger space to an intimate curvilinear interior. Its geometric composition, material, light, acoustics, and overall detailing follow evidence-based research to provide a personalized, sensory-friendly, and soothing experience. The installation is organized in three interconnected environments: In “outside: a collective experience,” participants engage with “Echoes” while they approach it from an exposed environment initially through its totem-like scale and playful geometry, and also with a series of light events that emerge from within and change slowly and dynamically in light intensity and coloration. This infrastructure acts somewhat as a faro/lighthouse that invites people closer. Participants then enter its intimate, interior space that functions as a “retreat” for an individual, dynamically adjusting the light and its sonic qualities based on their movement and breathing rhythms. Movements like rocking, vocalizing, or fidgeting are often misunderstood—but they’re essential forms of self-expression. Participants are encouraged to interact with a dynamic projection, “the self-reflecting moon,” that echoes their presence, encouraging self-awareness and playfulness, as a soothing process.

In terms of equipment, the installation space has three RealSense D435i cameras: one overhead for position tracking and two slightly above eye level for detectors that need a full-body view (skeleton tracking, face detection, etc.). A mini-PC is mounted on top of the structure, hidden from visitors, alongside a custom PCB designed as an Arduino shield for controlling other physical devices (such as LED strips, lights, and fans). The setup also includes the downward-facing short-throw projector, power supplies for all components, and the necessary cabling. LED strips along the curvilinear base of the structure illuminate the space. Additional equipment supporting audio generation includes two FMCW mmWave radar sensors, positioned at an angle of approximately 105 degrees to each other to ensure full coverage of the chamber area. The sensors are mounted at a height that aligns as closely as possible with torso level across a range of human heights. The setup is completed with two speakers mounted on the top ring of the structure for minimal visual obstruction. All cameras and sensors mounted on the walls of the space are encased, for minimal visibility, in 3D-printed components designed with a geometric synergy to the overall structure and with a materiality and texture that address tactile sensory needs. Together, these form the core of the room’s interactive setup, in precise coordination with the physical aspects and geometric characteristics of the structure.

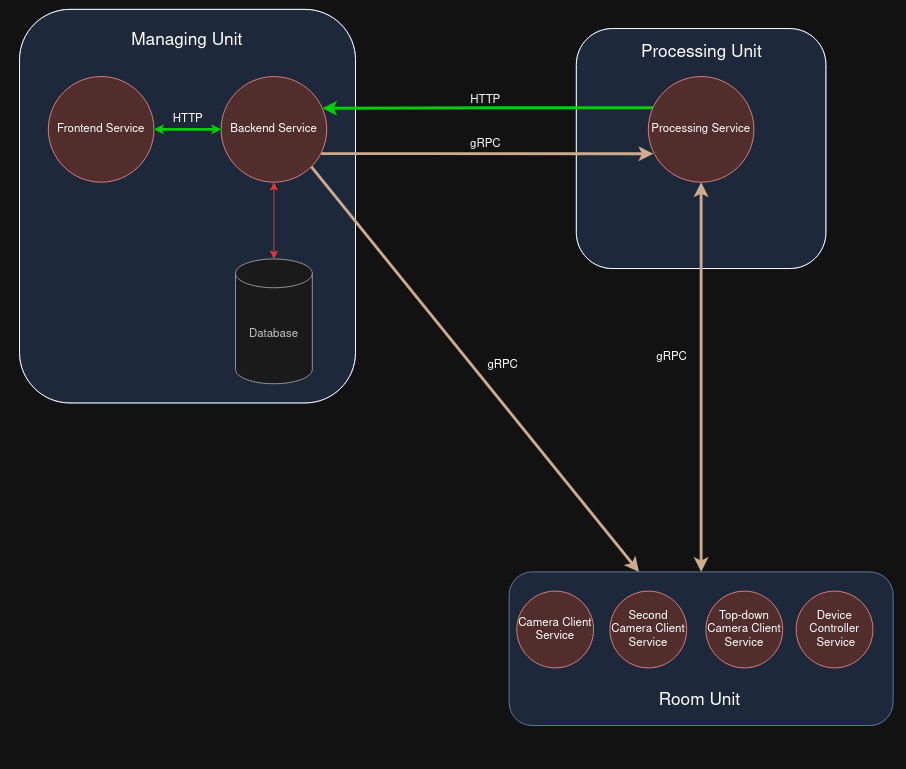

Application Architecture

The application is built using Python, React, and C (for the Arduino programming). The system is organized into three software units as Docker compositions for easy deployment and reproducibility during development. The separation of software units was chosen to provide the ability to deploy different parts of the application remotely to address the high computational demands of computer vision algorithms. During development, it was determined that the added latency of remote computation was unacceptable to the real-time demands of the experience, so all units were deployed locally on the same machine. The three software units of the system are:

– Management Unit: It includes the backend (FastAPI + SQLAlchemy) and frontend (React UI).

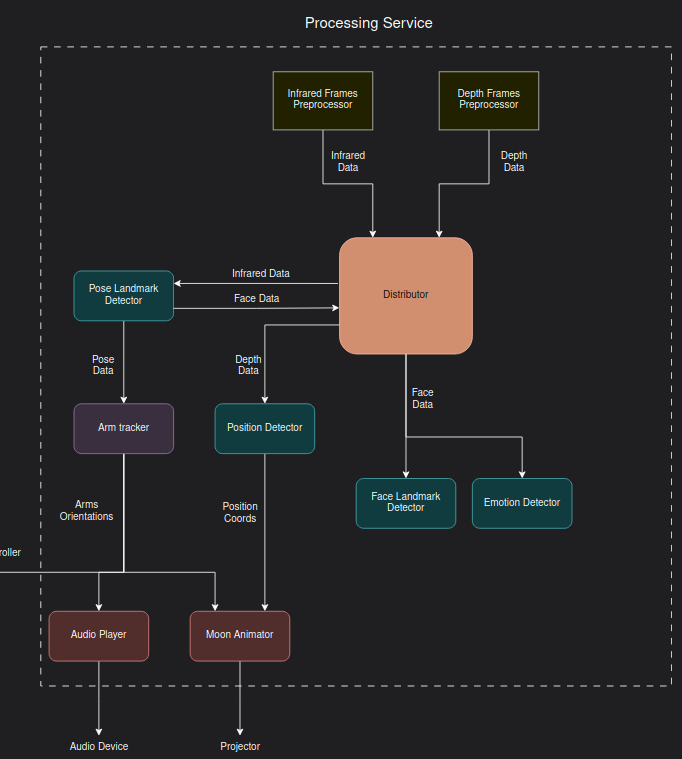

– Processing Unit: It receives input from different sources (cameras, mmWave sensors) and distributes it to the appropriate detection modules depending on the source. These, in turn, forward their outputs to other modules that translate the detections into the interactive media or logic of the room (moon animation, audio, lights).

– Room Unit: Composition of processes that interface with the physical installation. They handle capturing inputs from cameras and mmWave sensors to forward to the processing unit using gRPC streaming. They also handle the outputs of the processing unit, which they forward to the projector, LED strips, and other interactive devices.
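The source-based routing described for the Processing Unit can be illustrated with a minimal sketch. It is not the project's code: the class `ProcessingUnit`, its method names, and the source labels used in the usage note are hypothetical, and the gRPC transport between units is left out entirely.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical type aliases for this sketch only.
Detector = Callable[[Any], Dict[str, Any]]
Consumer = Callable[[str, Dict[str, Any]], None]


class ProcessingUnit:
    """Routes each input to the detectors registered for its source."""

    def __init__(self) -> None:
        self._detectors: Dict[str, List[Tuple[str, Detector]]] = defaultdict(list)
        self._consumers: List[Consumer] = []

    def register_detector(self, source: str, name: str, detector: Detector) -> None:
        # Several detectors may listen to the same source (e.g. one camera).
        self._detectors[source].append((name, detector))

    def register_consumer(self, consumer: Consumer) -> None:
        # Consumers stand in for the media modules (moon, audio, lights).
        self._consumers.append(consumer)

    def handle(self, source: str, payload: Any) -> int:
        """Run every detector bound to `source`; return how many ran."""
        ran = 0
        for name, detector in self._detectors.get(source, []):
            result = detector(payload)
            for consumer in self._consumers:
                consumer(name, result)
            ran += 1
        return ran
```

In use, one might register a detector under a label such as `"mmwave"` and a consumer that appends results to a list; inputs from a source with no registered detector are simply ignored and `handle` returns 0.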

Sensing & Detection Modules

Multiple Python-based detectors were implemented using custom or third-party tools. All detectors run at maximum throughput to meet the real-time demands of the application. Detectors that rely on an eye-level camera feed always run in pairs, one per eye-level camera, to increase coverage and accuracy. Each detector exposes a set of customizable settings that can be adjusted from the application UI.

– Skeleton Pose Tracking: Estimates human body posture by detecting and connecting key skeletal landmarks in real time. It is implemented using the pose landmarking module of Google MediaPipe, which uses a variant of the BlazePose model for 3D pose estimation. Pose Tracking Video

– Face Tracking: Building on the pose tracking above, a custom method uses the positions of several pose landmarks to determine a bounding box for the face in the image without running a separate face detector, which achieves high accuracy at minimal extra computational cost. The cropped face is then passed to the modules that analyze faces.

– Face Landmark Tracking: Detects face landmarks (such as mouth, eyes, brows, and nose) and blendshapes that can be used to recognize facial expressions. It is implemented using the face landmarking module of Google MediaPipe, which chains BlazeFace, a face mesh model, and a blendshape model into a single detection pipeline.

Face Blendshapes Video

– Sentiment Analysis: A pre-trained open-source Hugging Face model was used for face sentiment analysis, after experimenting with a few to identify the one that produces the best results in the setting of the application.

Sentiment Detector Video

– Position Tracking: For position tracking, a custom solution was implemented using the OpenCV library with an overhead depth camera. The adopted approach uses the depth frame from the camera above, applies a distance cutoff to remove the floor from the depth data, and then applies a mask to hide parts of the room (like walls) that are static in the frame (since the shape of the room is known). That way, when someone enters the room, they are the only tall enough object to appear in the depth data, and after applying the filtering methods, clustering can be performed to obtain the shape of the object and determine the position of the person in the room.

– Arm Tracking: The arm tracking module calculates the orientation of the arms. It combines the pose estimation from both detectors for higher accuracy and translates them to a set global coordinate system. Arm Tracking Video

– Vital signs tracking: The Texas Instruments mmWave SDK 3.5 was used to flash the IWR6843ISK sensors for vital signs monitoring. Vital signs data is transmitted via a USB-to-UART bridge. A dedicated listener was developed to parse and process the messages relevant to the application. Specifically, each mmWave sensor captures the user’s breathing rate along with their position relative to the sensor’s antenna. The data received from both sensors is evaluated and post-processed to assess user activity, distinguishing between stationary and moving states. Based on this assessment, the listener applies different methods to estimate the breathing rate, ensuring context-aware measurements.
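The three steps of the Position Tracking item above (distance cutoff, static mask, clustering) can be sketched as follows. This is a pure-Python stand-in written for illustration, not the OpenCV implementation used in the installation. The function name `locate_person`, the parameters `floor_cutoff_m` and `min_pixels`, the treatment of `0.0` as an invalid depth reading, and the choice of 4-connected components as the clustering step are all assumptions.

```python
from collections import deque
from typing import List, Optional, Tuple


def locate_person(
    depth: List[List[float]],        # overhead depth frame, in meters
    static_mask: List[List[bool]],   # True where walls/fixtures are known to be
    floor_cutoff_m: float,           # hypothetical: readings beyond this are floor
    min_pixels: int = 3,             # hypothetical: smallest blob accepted
) -> Optional[Tuple[float, float]]:
    """Return the (row, col) centroid of the largest foreground blob, or None."""
    rows, cols = len(depth), len(depth[0])
    # Steps 1 and 2: keep pixels nearer to the camera than the floor cutoff
    # (assumed: 0.0 means "no reading") and outside the static mask.
    foreground = [
        [0.0 < depth[r][c] < floor_cutoff_m and not static_mask[r][c]
         for c in range(cols)]
        for r in range(rows)
    ]
    # Step 3: cluster with 4-connected components via breadth-first search.
    seen = [[False] * cols for _ in range(rows)]
    best: List[Tuple[int, int]] = []
    for r in range(rows):
        for c in range(cols):
            if not foreground[r][c] or seen[r][c]:
                continue
            blob: List[Tuple[int, int]] = []
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and foreground[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(blob) > len(best):
                best = blob
    if len(best) < min_pixels:
        return None
    n = len(best)
    return (sum(y for y, _ in best) / n, sum(x for _, x in best) / n)
```

The centroid is returned in pixel coordinates; converting it to room coordinates would additionally require the camera's calibration, which this sketch does not cover.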

Other Modules

– Presence Tracking: The intended experience is for participants to walk into the room and begin the experience automatically without taking any particular actions. To achieve this, a presence tracker was implemented that uses the detections from other modules to gauge the confidence that a person is in the room. The detections used are from the position tracker and two skeleton trackers (one for each eye-level camera) to increase robustness. A patience factor is also used to mitigate false positive/negative detections and avoid starting or ending sessions prematurely.

– Distributor Module: To deal with the computational demands of the application, every computationally intensive module was implemented as a separate process (Python multiprocessing). Since large amounts of data (multiple image frames per second) are sent between processes, a custom distributor was implemented that uses pipes for maximum throughput.
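The "patience factor" in the Presence Tracking item above can be read as a debounce: the presence state flips only after several consecutive frames disagree with it. The sketch below shows one such interpretation. The class `PresenceTracker`, the `min_votes` agreement rule, and the default values are hypothetical and are not taken from the project.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class PresenceTracker:
    """Debounced presence flag built from several detectors' votes."""

    min_votes: int = 2     # hypothetical: detectors that must agree per frame
    patience: int = 5      # hypothetical: consecutive frames needed to flip
    present: bool = False
    _streak: int = 0       # consecutive frames contradicting `present`

    def update(self, votes: Iterable[bool]) -> bool:
        """Feed one frame of detector votes; return the debounced state."""
        observed = sum(bool(v) for v in votes) >= self.min_votes
        if observed == self.present:
            # The frame agrees with the current state: forget past doubts.
            self._streak = 0
        else:
            self._streak += 1
            if self._streak >= self.patience:
                self.present = observed
                self._streak = 0
        return self.present
```

With `patience=3`, a single frame in which all detectors miss the visitor does not end the session; three consecutive such frames do. A larger patience trades responsiveness for robustness.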

Interactive Media & Room Effects

Detector outputs drive interactive experiences in the installation space:

– Moon Animation: This module is in charge of rendering a “moon” animation and modeling its behavior. The rendering of the moon animation is written in OpenGL. The behavior logic is modeled using data from detector modules, such as position and velocity, and it controls variables such as rotation, phase, color, and brightness so that the moon responds to participant actions in the room or to the lack thereof. The behavior logic is implemented in Python.

– Interactive Devices: A PCB was designed and printed as an Arduino shield to overcome the limitations of an Arduino in controlling multiple devices, both in number and in power demands. The microcontroller serves as an interface between the application and the devices placed around the structure. It can drive up to 5 analog LED channels and switch up to 56 devices (lights/fans) on and off. It currently controls a 2-channel (warm white, cool white) LED strip. The light/fan devices are not used in the current phase of the project, but the software and hardware developed can support them in a future application.

PCB on top of the installation

– Audio production: This module dynamically transforms live data on breathing rate and presence, calculated during vital signs tracking, into a three-layered sound environment. The audio evolves in real time with fluctuations in the data. The module generates and manipulates multiple sound layers (ambient ocean sounds, bird calls, and wave samples), each of which responds to variations in the data. It processes incoming data and dynamically adjusts sound playback parameters such as volume, layering, and transitions. It also records the resulting audio output in real time, creating an evolving sonic landscape that reflects participant physiological states and environmental interactions.
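As an illustration of how breathing rate and presence could drive three layer volumes, the sketch below maps both inputs to target gains and moves the current gains toward them gradually. The mapping itself is invented for this example: the class `LayerMixer`, the `slow_bpm` and `fast_bpm` thresholds, the crossfade between birds and waves, and the smoothing constant are assumptions, and no audio playback or recording is performed.

```python
from dataclasses import dataclass, field
from typing import Dict


def _clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


@dataclass
class LayerMixer:
    slow_bpm: float = 8.0    # hypothetical: slow breathing rate
    fast_bpm: float = 24.0   # hypothetical: fast breathing rate
    smoothing: float = 0.2   # fraction of the gap closed per update
    gains: Dict[str, float] = field(
        default_factory=lambda: {"ocean": 0.0, "birds": 0.0, "waves": 0.0}
    )

    def targets(self, breathing_bpm: float, present: bool) -> Dict[str, float]:
        """Target gain per layer for the current reading (assumed mapping)."""
        if not present:
            return {"ocean": 0.0, "birds": 0.0, "waves": 0.0}
        span = self.fast_bpm - self.slow_bpm
        pace = _clamp01((breathing_bpm - self.slow_bpm) / span)
        return {
            "ocean": 1.0,          # ambient bed while someone is present
            "birds": 1.0 - pace,   # recedes as breathing speeds up
            "waves": pace,         # comes forward as breathing speeds up
        }

    def update(self, breathing_bpm: float, present: bool) -> Dict[str, float]:
        """Move each gain a step toward its target and return a copy."""
        for name, goal in self.targets(breathing_bpm, present).items():
            self.gains[name] += self.smoothing * (goal - self.gains[name])
        return dict(self.gains)
```

Calling `update` once per incoming reading yields gains that drift toward the target mix rather than jumping to it; a smaller `smoothing` value gives slower transitions between layers.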

– Backend Infrastructure

A FastAPI + SQLAlchemy backend acts as the bridge between the processing unit, UI, and database, handling real-time session data saving, communicating configuration changes, and serving recorded session data.

– Frontend Session Management & Configuration UI

A browser-based user interface built with React provides session management and configuration tools. It enables operators to initiate and monitor sessions, download past session data, and change application settings. It also provides tools to calibrate the devices.

– Live Feed Visualization UI

A lightweight HTML interface paired with a FastAPI streaming server delivers real-time camera feeds from the detector modules. Initially designed for development, this component is also suitable for production monitoring.

– Data Handling

Anonymous data for each session is stored, enabling longitudinal study of user interaction while respecting privacy.

Availability: The application is available locally as containerized services (via Docker) and can be accessed through a browser on a computer on site.

Scenarios / Tests / Exhibitions:

Exhibition of work to Invited Public, Feedback sessions with Focus Groups, and Presentations

– On Friday, July 4th, and Wednesday, July 9th, 2025, the “Echoes” installation was presented to guests on the Autism Spectrum, their therapists, and family caregivers (approximately 30 people). The events took place at the municipal gymnasium Neo Ikonio in Perama, Greece, a space provided to the artists for the construction of the work by the Perama City Hall. The creators received feedback that contributes to their research through on-site discussions, observation, and a qualitative questionnaire they developed regarding the impact of the overall experience, including specific spatial aspects of sound and light projections adapted in real-time based on the participant’s body movement, facial expressions, and respiratory rates within the Echoes’ interior. Find some responses to the questionnaire here.

– On June 24th, the work was presented at the European Cultural Centre (ECC) – Palazzo Michiel as part of the 2025 Venice Biennial. Presentation title: environMENTAL: Frameworks for sensory Multimodal spaces and NeuroInclusion.

– In May 2025, the progress of “Echoes” was presented at the SCUP Mid Atlantic Regional Conference 2025. “Planning for a Changing Future: Learning + Community + Impact.” Session theme: Methodologies centering neurodivergence in campus planning and design. https://www.scup.org/conferences-programs/mid-atlantic-2025-regional-conference/#program

– October 22nd, 2025. Grand Round in psychiatry – a weekly educational session for the department of psychiatry and human behavior at Thomas Jefferson University. 84 psychology and psychiatry professionals in attendance.

– October 27th, 2025, equity roundtable – NeuroInclusive Design Strategies at SOM Skidmore, Owings & Merrill.

– November 7th, Tyler School of Architecture, Temple University, Presentation of aspects of the work with lecture title: Recursive Worlds: The Design-Science Paradigm.

Interviews

During the development phase, in-person and online interviews were conducted with 13 autistic individuals who responded positively to an open call for collaboration. These interviews are featured in the “Voices” short documentary film. Link of the trailer and request for screening here.

Development phase experiments

Several in-house experiments were performed to improve, test, and identify limitations of the tools and structural complexities of the physical space:

– Sensor testing for input data capture

– Detection accuracy

– Data throughput testing

– Real-time computation performance

– Light conditions performance impact

– Session runs by simulating conditions of an on-site setting

– Interactive media design choices

– Testing of the projection surface’s geometry and dimensions in relation to projector’s location and throw distance specifications

– Iterative testing of geometry, assembly process, and structural performance of the physical space

– Iterative testing of acoustic qualities

– Testing and accuracy of the sensors’ 3d printed modules

Experiments for heart rate tracking, audio generation

– Accuracy evaluation through cross-referencing with breathing rate data from the EmotiBit wearable sensor

– Sensor placement testing at various heights to optimize breathing rate detection across a range of user statures

– Real-world simulation sessions to assess and adjust the audio output based on breathing rate measurements for environmental feedback.

– Evaluation of the aesthetic quality of the audio output and its impact on user stress levels, including whether the sound calmed or increased stress.

Lessons learned:

– Emotional Impact: Most participants described feelings of calmness, introspection, and emotional resonance. One participant mentioned melancholy due to external factors, but found hope through the music.

– Light Interaction: Some participants noticed the dynamic light changes, while others only realized it after being informed. The moon projection and external lighting were particularly appreciated.

– Sound Experience: Sounds like cicadas, sea waves, wind through trees, and heartbeat pulses were mentioned. These contributed to a soothing atmosphere, though abrupt sound transitions were noted as slightly disruptive.

– Participant Suggestions:

– More multi-sensory engagement (e.g., tactile stimuli).

– Greater light interaction at body level (not just the dome).

– Smoother sound transitions for immersive continuity.

– Potential applications in schools, hospitals, and public spaces.

– Neurodivergence: Some respondents shared personal or caregiving experiences with autism or synesthesia, highlighting the installation’s potential for empathy and therapeutic impact.

Within the “Echoes” installation, sensor-based technologies capable of contactless (distant-to-the-body) respiratory and movement readings were employed. For the associated research that informs “Echoes” and the resulting documentary “Voices”, the methods and steps employed include:

– the active collaboration with ASD advocates in the conceptualization of the process that resulted in the preparation of visual stories before the interviews to support the ASD interviewees,

– the iterative design for the open call flyer that addressed inclusive graphic aspects and language, and

– the application for, and receipt of, IRB approval before conducting the interviews, to name a few.

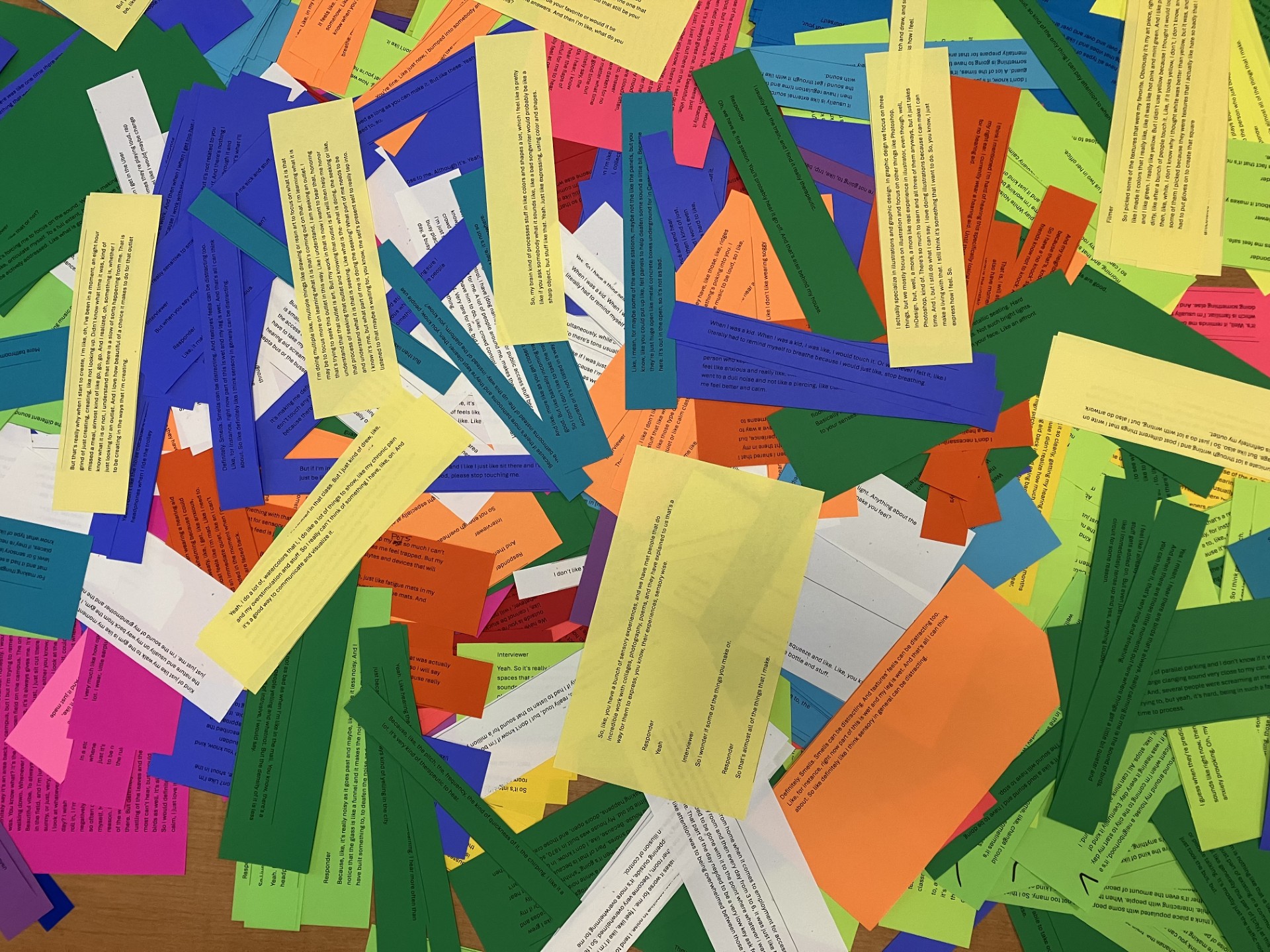

The collaboration of the artists with population and behavioral health experts also informed the format of the interviews: the interviewers traveled with the participants through various urban typologies so that participants could react first-hand to environmental stimuli. The responses to the semi-structured interview questions throughout the visit underwent a multi-step coding and thematic analysis process with two coders from different disciplines to ensure inter-coder reliability and provide a balanced perspective. These methods, to be published in a forthcoming paper, and the resulting short documentary film can be referenced by others. The installation and associated research contribute knowledge on the sensory and sonic challenges of autistic individuals and could inform interventions and urban planning efforts to make cities more comfortable places for all people.

Adaptability / interoperability:

These technologies inform adaptive spatial features, such as dynamic lighting and space sonification, and, with further development, have long-term potential across a wide range of architectural typologies (e.g., hospitals, schools, museums, stadiums) and urban environments (e.g., transit systems, park networks, and underutilized public spaces).

Impact

This integrated toolset enables real-time, immersive interaction between participants and the installation. It bridges computer vision, mmWave radar sensing, sentiment analysis, and physical media responses, creating a responsive environment that blends digital and physical elements.

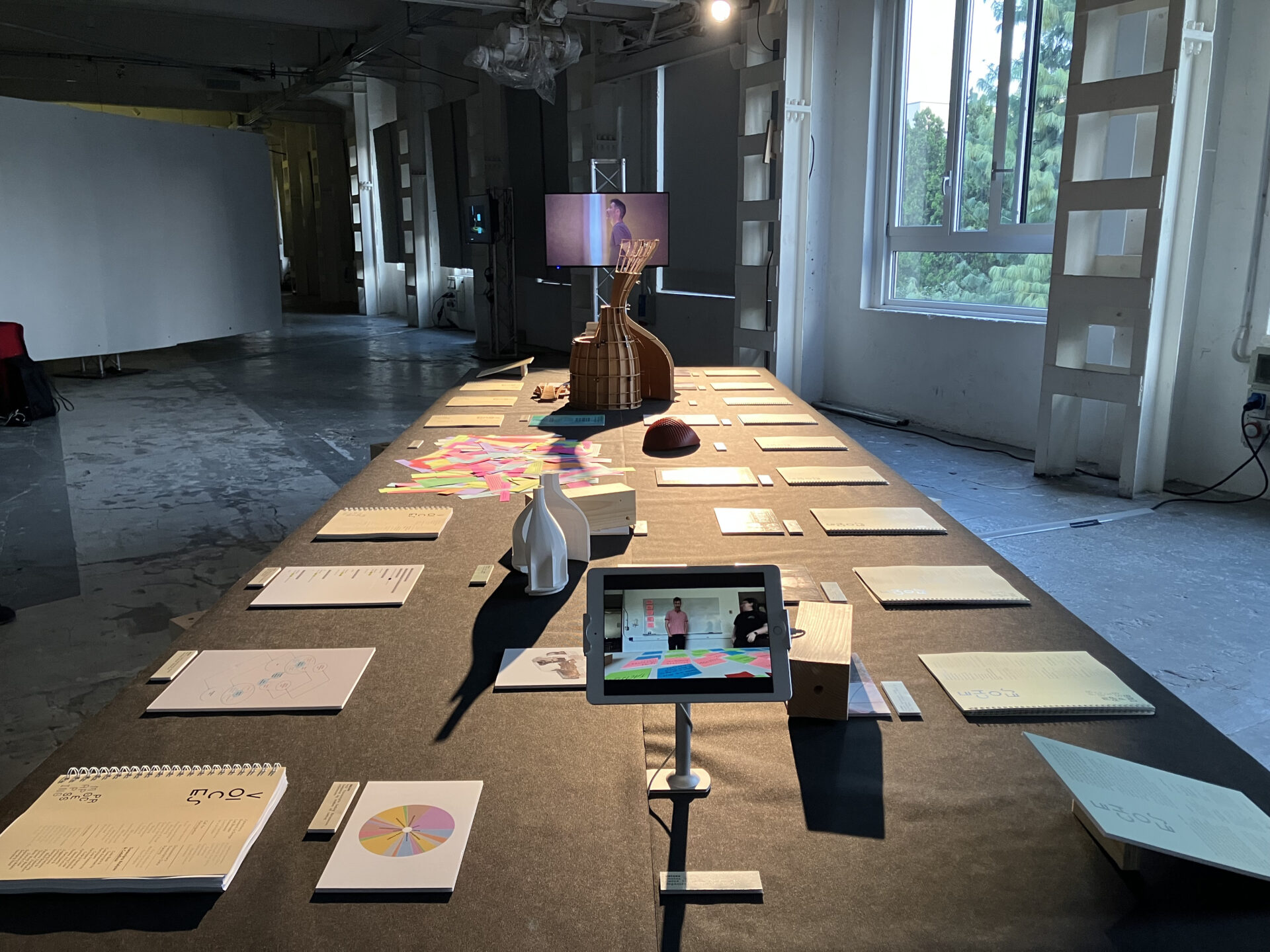

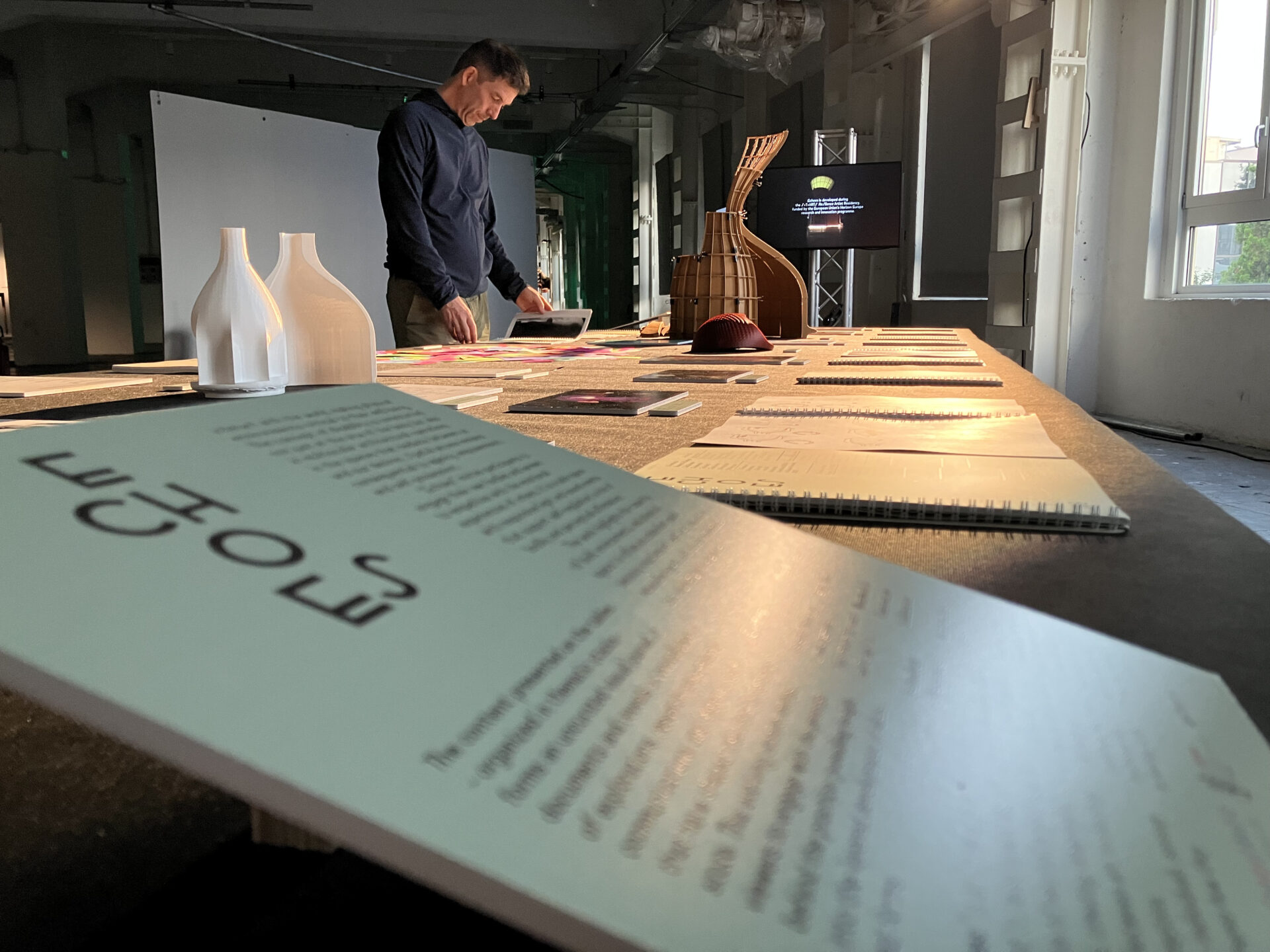

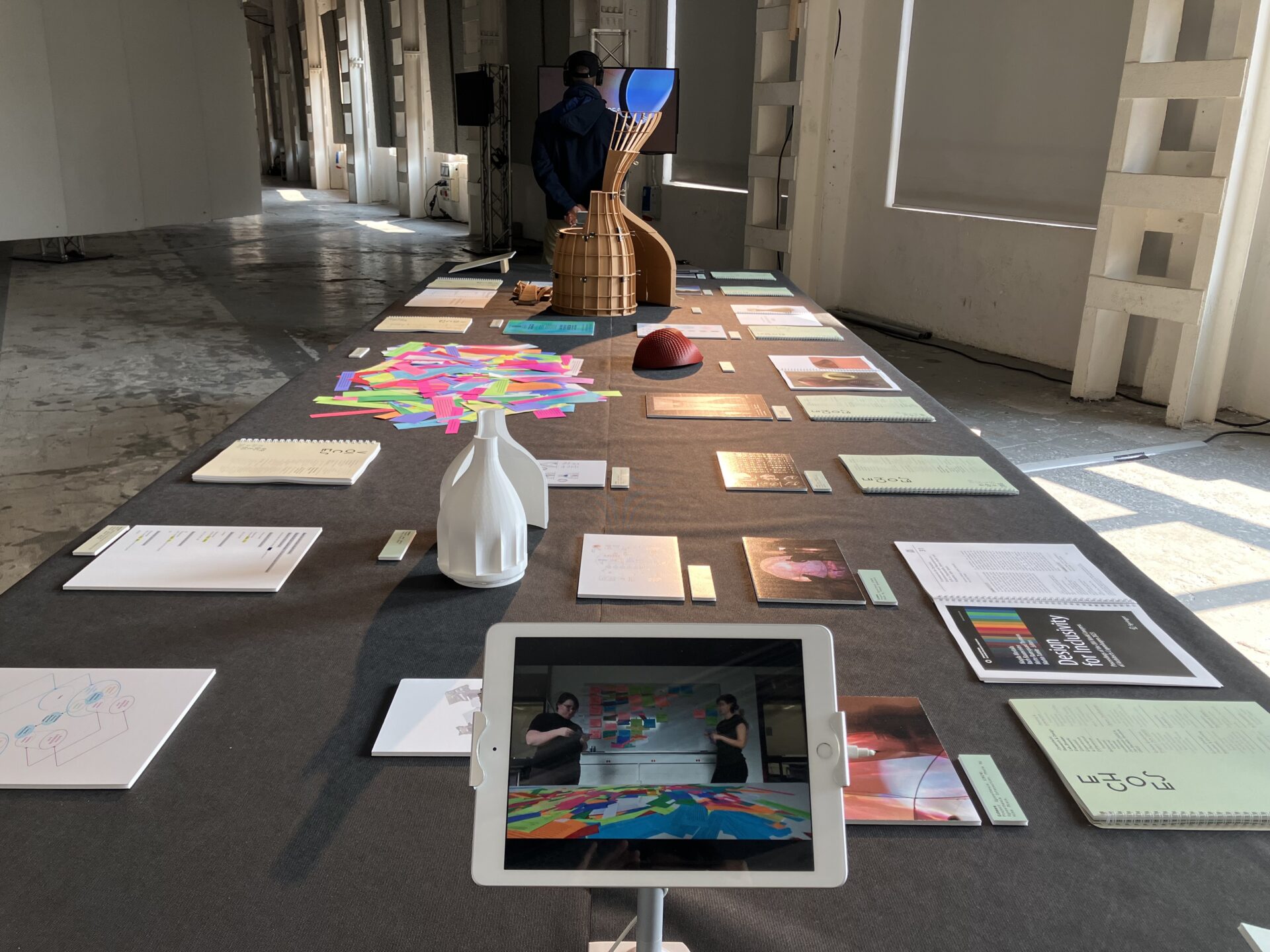

“Echoes” overall experience.

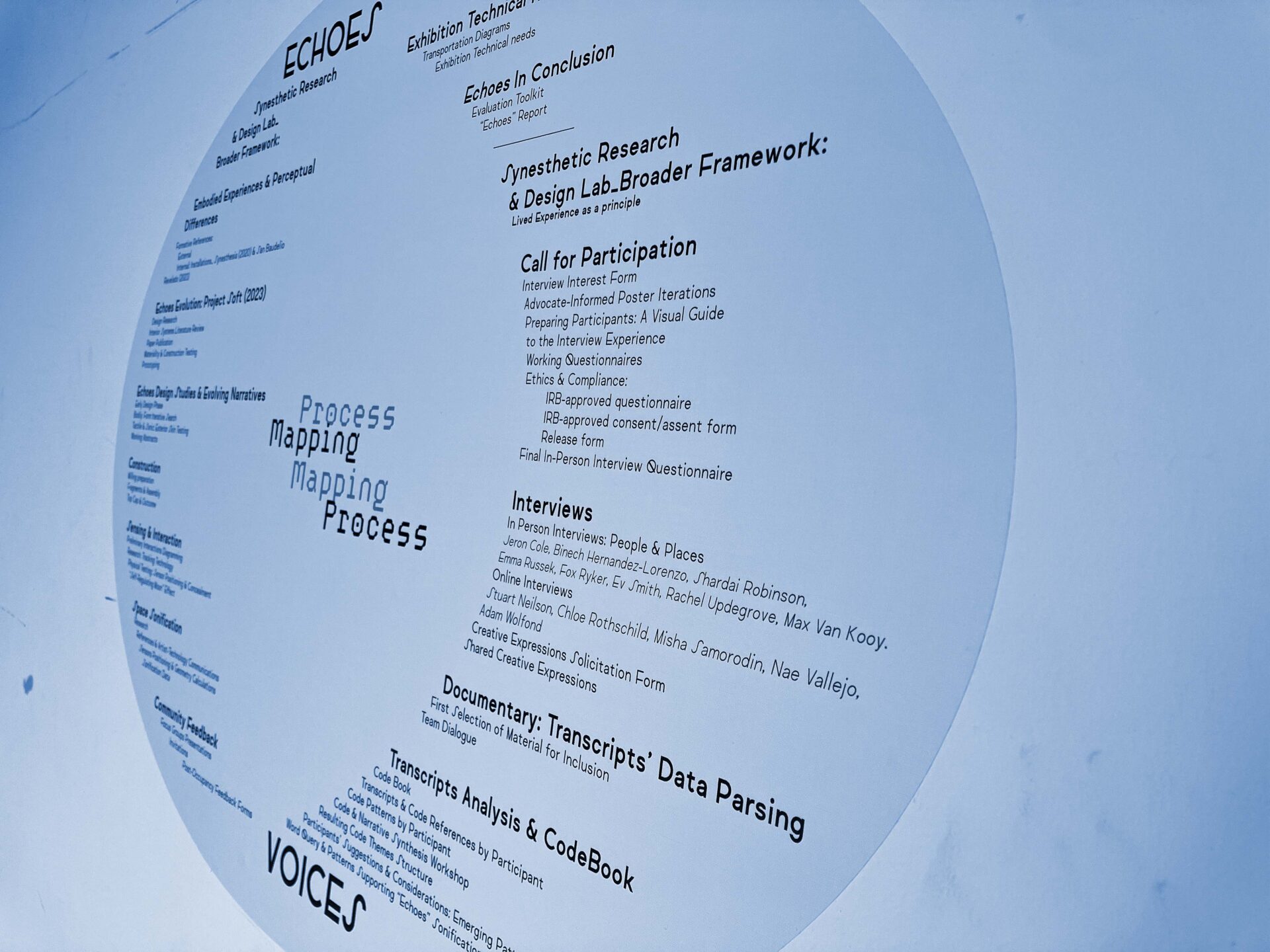

“Echoes” process.

“Echoes” overall experience.

VOICES transcripts coding workshop.

Echoes’ interior environment with self-reflecting moon and embodied ambient sound activated by the visitor’s movement and respiration. The space-body sonification stems from research on sounds comforting to autistic individuals.

Static view of the exterior of Echoes with slightly changing light, echoing the presence of a person inside the installation